I. Deploying KubeSphere on k8s

1.1 Deploying KubeSphere on an existing k8s cluster

1.1.1 Set up NFS
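The original gives no commands for this step, so here is a minimal sketch. It assumes CentOS 7 nodes, root access, and the export directory /nfs/data and server 172.31.0.4 that the manifests in 1.1.2 point at. The function names are hypothetical. The no_root_squash option lets the provisioner create per-PV directories as root, but it weakens isolation. Set DRY_RUN=1 to print the commands instead of running them.

```shell
#!/bin/sh
# Sketch only: NFS server/client setup for nfs-subdir-external-provisioner.
# Assumptions: CentOS 7 (yum), run as root, export path /nfs/data.
# Function names are hypothetical; set DRY_RUN=1 to print commands instead.

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Run on the NFS server (172.31.0.4 in this article)
setup_nfs_server() {
  export_dir="$1"
  run yum install -y nfs-utils
  run mkdir -p "$export_dir"
  # Export to all clients; no_root_squash lets the provisioner create dirs as root
  run sh -c "echo '$export_dir *(insecure,rw,sync,no_root_squash)' > /etc/exports"
  run systemctl enable --now rpcbind nfs-server
  run exportfs -r
}

# Run on every k8s node: the kubelet needs the NFS client tools to mount volumes
setup_nfs_client() {
  server="$1"
  run yum install -y nfs-utils
  # Should list the exported directory
  run showmount -e "$server"
}

# Usage:
#   setup_nfs_server /nfs/data      # on 172.31.0.4
#   setup_nfs_client 172.31.0.4     # on the other nodes
```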

1.1.2 Create a StorageClass

## Creates a StorageClass
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
parameters:
  archiveOnDelete: "true"  ## whether to archive (back up) the PV's data when the PV is deleted
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/nfs-subdir-external-provisioner:v4.0.2
          # resources:
          #   limits:
          #     cpu: 10m
          #   requests:
          #     cpu: 10m
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 172.31.0.4  ## your own NFS server address
            - name: NFS_PATH
              value: /nfs/data  ## directory exported by the NFS server
      volumes:
        - name: nfs-client-root
          nfs:
            server: 172.31.0.4
            path: /nfs/data
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

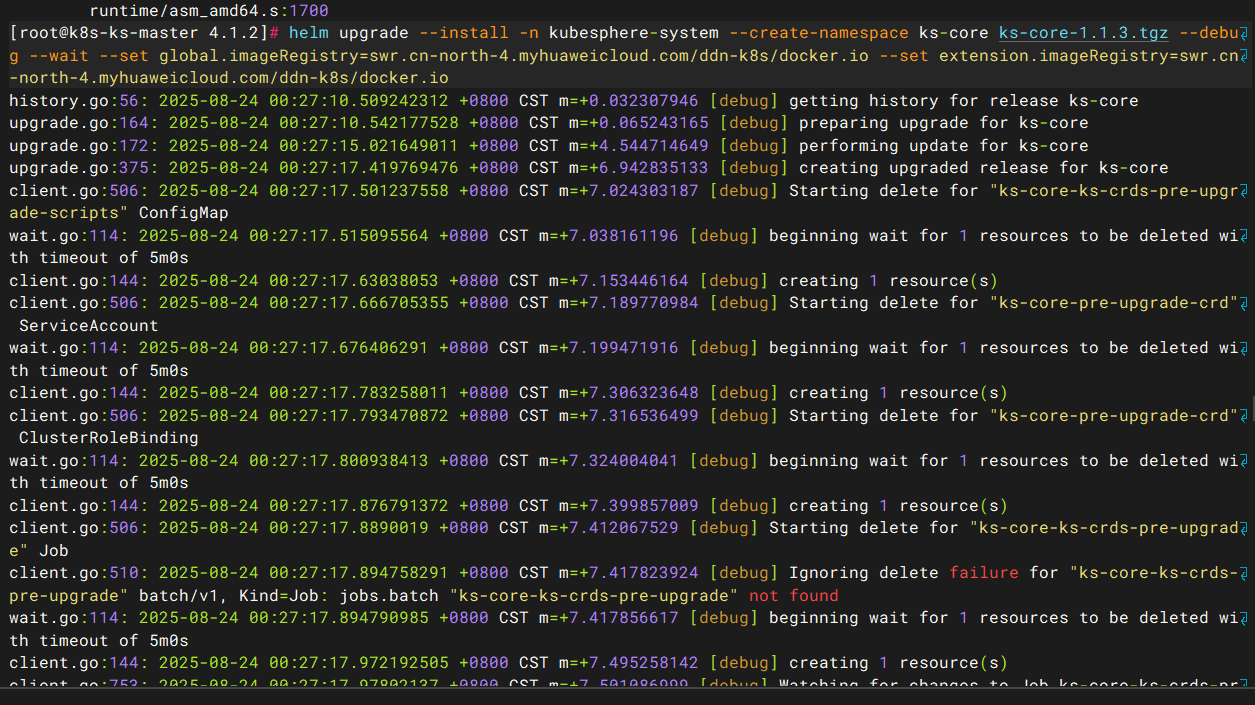

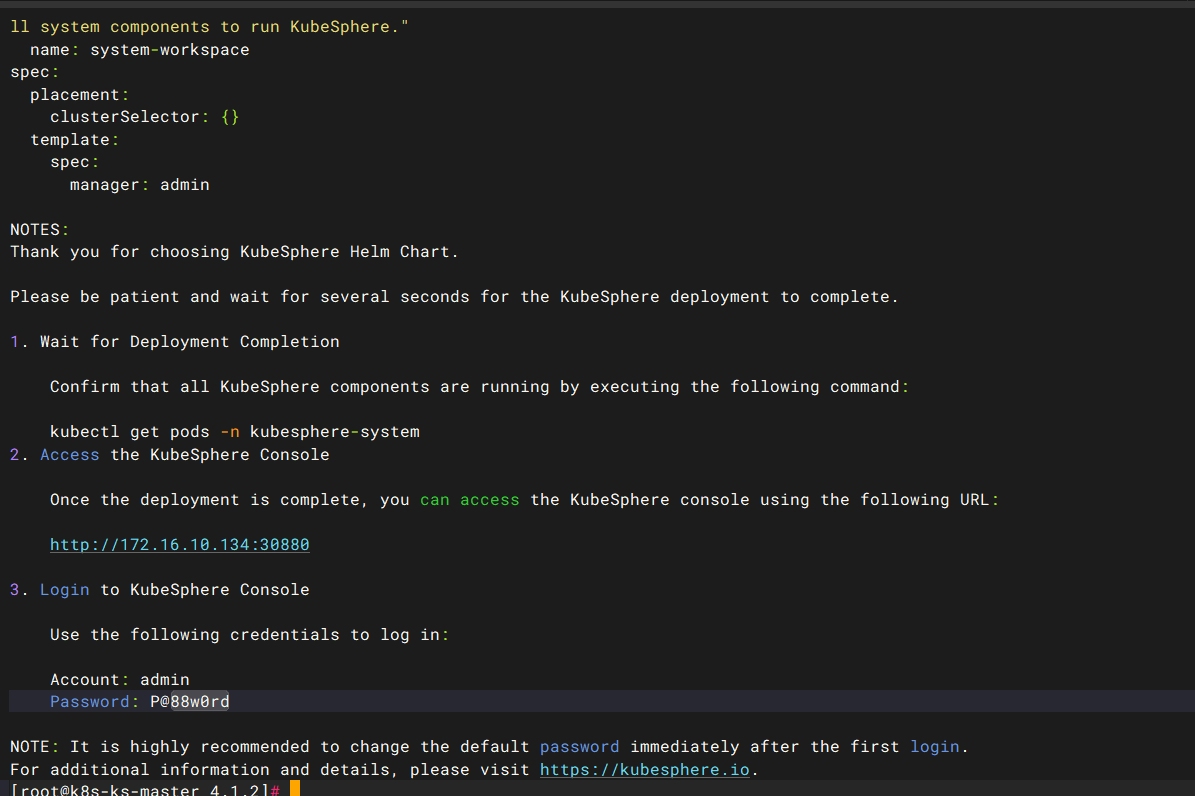
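Before moving on, it is worth confirming that dynamic provisioning works. Below is a minimal sketch that prints a throwaway PVC against the nfs-storage class defined above. The PVC name test-nfs-pvc and the 1Gi size are arbitrary choices for this check. The claim should reach Bound within seconds, and a matching subdirectory should appear under /nfs/data on the NFS server.

```shell
# Print a throwaway PVC that requests storage from the nfs-storage class.
# test-nfs-pvc and 1Gi are arbitrary values chosen for this check.
test_pvc_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-nfs-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: nfs-storage
  resources:
    requests:
      storage: 1Gi
EOF
}

# Usage:
#   kubectl get storageclass                  # nfs-storage should be marked (default)
#   test_pvc_manifest | kubectl apply -f -
#   kubectl get pvc test-nfs-pvc              # STATUS should become Bound
#   kubectl delete pvc test-nfs-pvc           # clean up
```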

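1.1.3 Deploying KubeSphere with Helm

PS: To create the NFS StorageClass, follow sections 1.1.1 and 1.1.2 above; those steps are not repeated here.

Install the core component, KubeSphere Core: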

# If charts.kubesphere.io is unreachable, replace charts.kubesphere.io with charts.kubesphere.com.cn
helm upgrade --install -n kubesphere-system --create-namespace ks-core ks-core-1.1.3.tgz --debug --wait \
  --set global.imageRegistry=swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io \
  --set extension.imageRegistry=swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io \
  --set hostClusterName=k8s-1334
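To confirm the release came up, here is a short sketch of the checks. It assumes the chart creates a ks-console Deployment and Service in kubesphere-system and serves the console on NodePort 30880, as described in the KubeSphere 4.1 docs. Compare these names against your own kubectl output. In those docs the default login is admin / P@88w0rd.

```shell
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Check that ks-core is up; resource names follow the KubeSphere 4.1 chart (assumption)
check_ks_core() {
  run helm status ks-core -n kubesphere-system
  run kubectl -n kubesphere-system get pods
  run kubectl -n kubesphere-system rollout status deployment/ks-console --timeout=300s
  run kubectl -n kubesphere-system get svc ks-console
}

# Console URL for a given node IP (NodePort 30880)
console_url() {
  printf 'http://%s:30880\n' "$1"
}
```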

1.1.4 Deploying the legacy KubeSphere 3.x with YAML (the manifests below install v3.1.1)

1.1.5 Create kubesphere-installer.yaml

---
# apiextensions.k8s.io/v1beta1 was removed in Kubernetes 1.22, so this CRD only applies on 1.21 and earlier
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: clusterconfigurations.installer.kubesphere.io
spec:
  group: installer.kubesphere.io
  versions:
  - name: v1alpha1
    served: true
    storage: true
  scope: Namespaced
  names:
    plural: clusterconfigurations
    singular: clusterconfiguration
    kind: ClusterConfiguration
    shortNames:
    - cc

---
apiVersion: v1
kind: Namespace
metadata:
  name: kubesphere-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ks-installer
  namespace: kubesphere-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ks-installer
rules:
- apiGroups:
  - ""
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - apps
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - extensions
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - batch
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - rbac.authorization.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - apiregistration.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - apiextensions.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - tenant.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - certificates.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - devops.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - monitoring.coreos.com
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - logging.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - jaegertracing.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - storage.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - policy
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - autoscaling
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - networking.istio.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - config.istio.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - iam.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - notification.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - auditing.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - events.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - core.kubefed.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - installer.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - storage.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - security.istio.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - monitoring.kiali.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - kiali.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - networking.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - kubeedge.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - types.kubefed.io
  resources:
  - '*'
  verbs:
  - '*'
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: ks-installer
subjects:
- kind: ServiceAccount
  name: ks-installer
  namespace: kubesphere-system
roleRef:
  kind: ClusterRole
  name: ks-installer
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    app: ks-install
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ks-install
  template:
    metadata:
      labels:
        app: ks-install
    spec:
      serviceAccountName: ks-installer
      containers:
      - name: installer
        image: kubesphere/ks-installer:v3.1.1
        imagePullPolicy: "Always"
        resources:
          limits:
            cpu: "1"
            memory: 1Gi
          requests:
            cpu: 20m
            memory: 100Mi
        volumeMounts:
        - mountPath: /etc/localtime
          name: host-time
      volumes:
      - hostPath:
          path: /etc/localtime
          type: ""
        name: host-time

1.1.6 Create cluster-configuration.yaml

---
apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    version: v3.1.1
spec:
  persistence:
    storageClass: ""        # If there is no default StorageClass in your cluster, you need to specify an existing StorageClass here.
  authentication:
    jwtSecret: ""           # Keep the jwtSecret consistent with the Host Cluster. Retrieve the jwtSecret by executing "kubectl -n kubesphere-system get cm kubesphere-config -o yaml | grep -v "apiVersion" | grep jwtSecret" on the Host Cluster.
  local_registry: ""        # Add your private registry address if it is needed.
  etcd:
    monitoring: true        # Enable or disable etcd monitoring dashboard installation. You have to create a Secret for etcd before you enable it.
    endpointIps: 172.31.0.4 # etcd cluster EndpointIps. It can be a bunch of IPs here.
    port: 2379              # etcd port.
    tlsEnable: true
  common:
    redis:
      enabled: true
    openldap:
      enabled: true
    minioVolumeSize: 20Gi     # Minio PVC size.
    openldapVolumeSize: 2Gi   # openldap PVC size.
    redisVolumSize: 2Gi       # Redis PVC size.
    monitoring:
      # type: external   # Whether to specify the external prometheus stack, and need to modify the endpoint at the next line.
      endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090 # Prometheus endpoint to get metrics data.
    es:   # Storage backend for logging, events and auditing.
      # elasticsearchMasterReplicas: 1   # The total number of master nodes. Even numbers are not allowed.
      # elasticsearchDataReplicas: 1     # The total number of data nodes.
      elasticsearchMasterVolumeSize: 4Gi   # The volume size of Elasticsearch master nodes.
      elasticsearchDataVolumeSize: 20Gi    # The volume size of Elasticsearch data nodes.
      logMaxAge: 7                     # Log retention time in built-in Elasticsearch. It is 7 days by default.
      elkPrefix: logstash              # The string making up index names. The index name will be formatted as ks-<elk_prefix>-log.
      basicAuth:
        enabled: false
        username: ""
        password: ""
      externalElasticsearchUrl: ""
      externalElasticsearchPort: ""
  console:
    enableMultiLogin: true  # Enable or disable simultaneous logins. It allows different users to log in with the same account at the same time.
    port: 30880
  alerting:                # (CPU: 0.1 Core, Memory: 100 MiB) It enables users to customize alerting policies to send messages to receivers in time with different time intervals and alerting levels to choose from.
    enabled: true          # Enable or disable the KubeSphere Alerting System.
    # thanosruler:
    #   replicas: 1
    #   resources: {}
  auditing:                # Provide a security-relevant chronological set of records,recording the sequence of activities happening on the platform, initiated by different tenants.
    enabled: true          # Enable or disable the KubeSphere Auditing Log System.
  devops:                  # (CPU: 0.47 Core, Memory: 8.6 G) Provide an out-of-the-box CI/CD system based on Jenkins, and automated workflow tools including Source-to-Image & Binary-to-Image.
    enabled: true          # Enable or disable the KubeSphere DevOps System.
    jenkinsMemoryLim: 2Gi      # Jenkins memory limit.
    jenkinsMemoryReq: 1500Mi   # Jenkins memory request.
    jenkinsVolumeSize: 8Gi     # Jenkins volume size.
    jenkinsJavaOpts_Xms: 512m  # The following three fields are JVM parameters.
    jenkinsJavaOpts_Xmx: 512m
    jenkinsJavaOpts_MaxRAM: 2g
  events:                  # Provide a graphical web console for Kubernetes Events exporting, filtering and alerting in multi-tenant Kubernetes clusters.
    enabled: true          # Enable or disable the KubeSphere Events System.
    ruler:
      enabled: true
      replicas: 2
  logging:                 # (CPU: 57 m, Memory: 2.76 G) Flexible logging functions are provided for log query, collection and management in a unified console. Additional log collectors can be added, such as Elasticsearch, Kafka and Fluentd.
    enabled: true          # Enable or disable the KubeSphere Logging System.
    logsidecar:
      enabled: true
      replicas: 2
  metrics_server:          # (CPU: 56 m, Memory: 44.35 MiB) It enables HPA (Horizontal Pod Autoscaler).
    enabled: false         # Enable or disable metrics-server.
  monitoring:
    storageClass: ""       # If there is an independent StorageClass you need for Prometheus, you can specify it here. The default StorageClass is used by default.
    # prometheusReplicas: 1  # Prometheus replicas are responsible for monitoring different segments of data source and providing high availability.
    prometheusMemoryRequest: 400Mi   # Prometheus request memory.
    prometheusVolumeSize: 20Gi       # Prometheus PVC size.
    # alertmanagerReplicas: 1        # AlertManager Replicas.
  multicluster:
    clusterRole: none      # host | member | none # You can install a solo cluster, or specify it as the Host or Member Cluster.
  network:
    networkpolicy:         # Network policies allow network isolation within the same cluster, which means firewalls can be set up between certain instances (Pods).
      # Make sure that the CNI network plugin used by the cluster supports NetworkPolicy. There are a number of CNI network plugins that support NetworkPolicy, including Calico, Cilium, Kube-router, Romana and Weave Net.
      enabled: true        # Enable or disable network policies.
    ippool:                # Use Pod IP Pools to manage the Pod network address space. Pods to be created can be assigned IP addresses from a Pod IP Pool.
      type: calico         # Specify "calico" for this field if Calico is used as your CNI plugin. "none" means that Pod IP Pools are disabled.
    topology:              # Use Service Topology to view Service-to-Service communication based on Weave Scope.
      type: none           # Specify "weave-scope" for this field to enable Service Topology. "none" means that Service Topology is disabled.
  openpitrix:              # An App Store that is accessible to all platform tenants. You can use it to manage apps across their entire lifecycle.
    store:
      enabled: true        # Enable or disable the KubeSphere App Store.
  servicemesh:             # (0.3 Core, 300 MiB) Provide fine-grained traffic management, observability and tracing, and visualized traffic topology.
    enabled: true          # Base component (pilot). Enable or disable KubeSphere Service Mesh (Istio-based).
  kubeedge:                # Add edge nodes to your cluster and deploy workloads on edge nodes.
    enabled: true          # Enable or disable KubeEdge.
    cloudCore:
      nodeSelector: {"node-role.kubernetes.io/worker": ""}
      tolerations: []
      cloudhubPort: "10000"
      cloudhubQuicPort: "10001"
      cloudhubHttpsPort: "10002"
      cloudstreamPort: "10003"
      tunnelPort: "10004"
      cloudHub:
        advertiseAddress:  # At least a public IP address or an IP address which can be accessed by edge nodes must be provided.
          - ""             # Note that once KubeEdge is enabled, CloudCore will malfunction if the address is not provided.
        nodeLimit: "100"
      service:
        cloudhubNodePort: "30000"
        cloudhubQuicNodePort: "30001"
        cloudhubHttpsNodePort: "30002"
        cloudstreamNodePort: "30003"
        tunnelNodePort: "30004"
    edgeWatcher:
      nodeSelector: {"node-role.kubernetes.io/worker": ""}
      tolerations: []
      edgeWatcherAgent:
        nodeSelector: {"node-role.kubernetes.io/worker": ""}
        tolerations: []
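The configuration above enables etcd monitoring, and its own comment says an etcd Secret must exist first. Below is a sketch of the whole apply sequence. It assumes kubeadm-style certificate paths under /etc/kubernetes/pki, so adjust them for your etcd. The Secret name kube-etcd-client-certs is the one used in the KubeSphere v3 installation docs. The function names are hypothetical.

```shell
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

install_ks_v3() {
  # etcd monitoring is enabled, so the client-certificate Secret must exist first
  run kubectl create namespace kubesphere-monitoring-system
  run kubectl -n kubesphere-monitoring-system create secret generic kube-etcd-client-certs \
    --from-file=etcd-client-ca.crt=/etc/kubernetes/pki/etcd/ca.crt \
    --from-file=etcd-client.crt=/etc/kubernetes/pki/apiserver-etcd-client.crt \
    --from-file=etcd-client.key=/etc/kubernetes/pki/apiserver-etcd-client.key
  # Installer first (CRD, RBAC, ks-installer Deployment), then the ClusterConfiguration it watches
  run kubectl apply -f kubesphere-installer.yaml
  run kubectl apply -f cluster-configuration.yaml
}

# Follow the installer log until it prints the console address
follow_installer_log() {
  run kubectl -n kubesphere-system logs -f deployment/ks-installer
}
```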

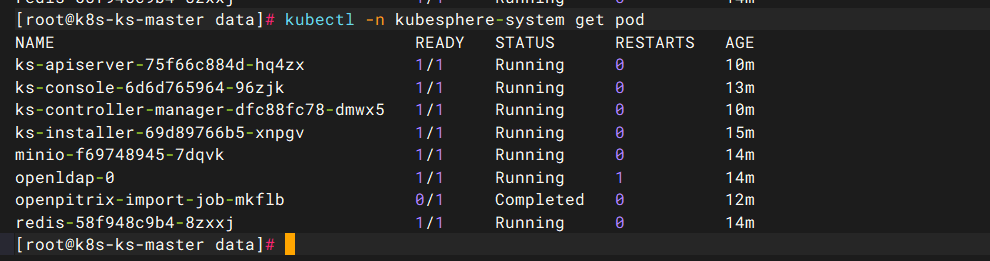

Check the status of the pods:

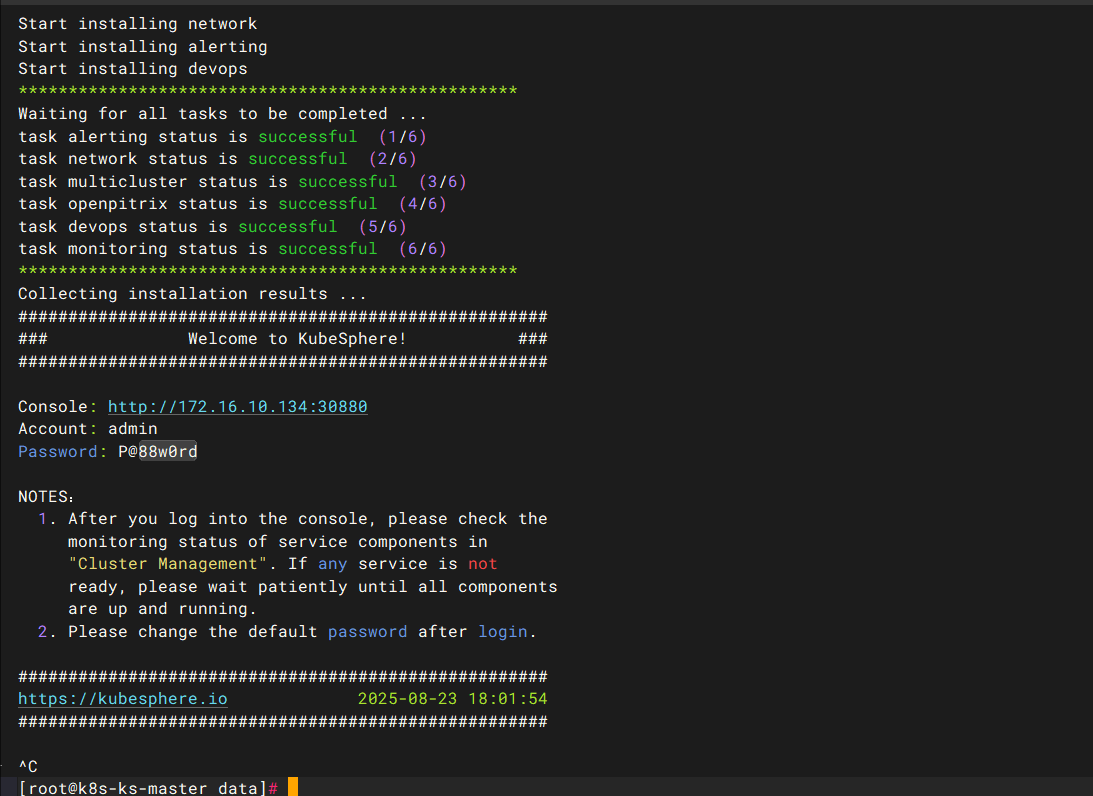

1.1.7 Find the console credentials and log in

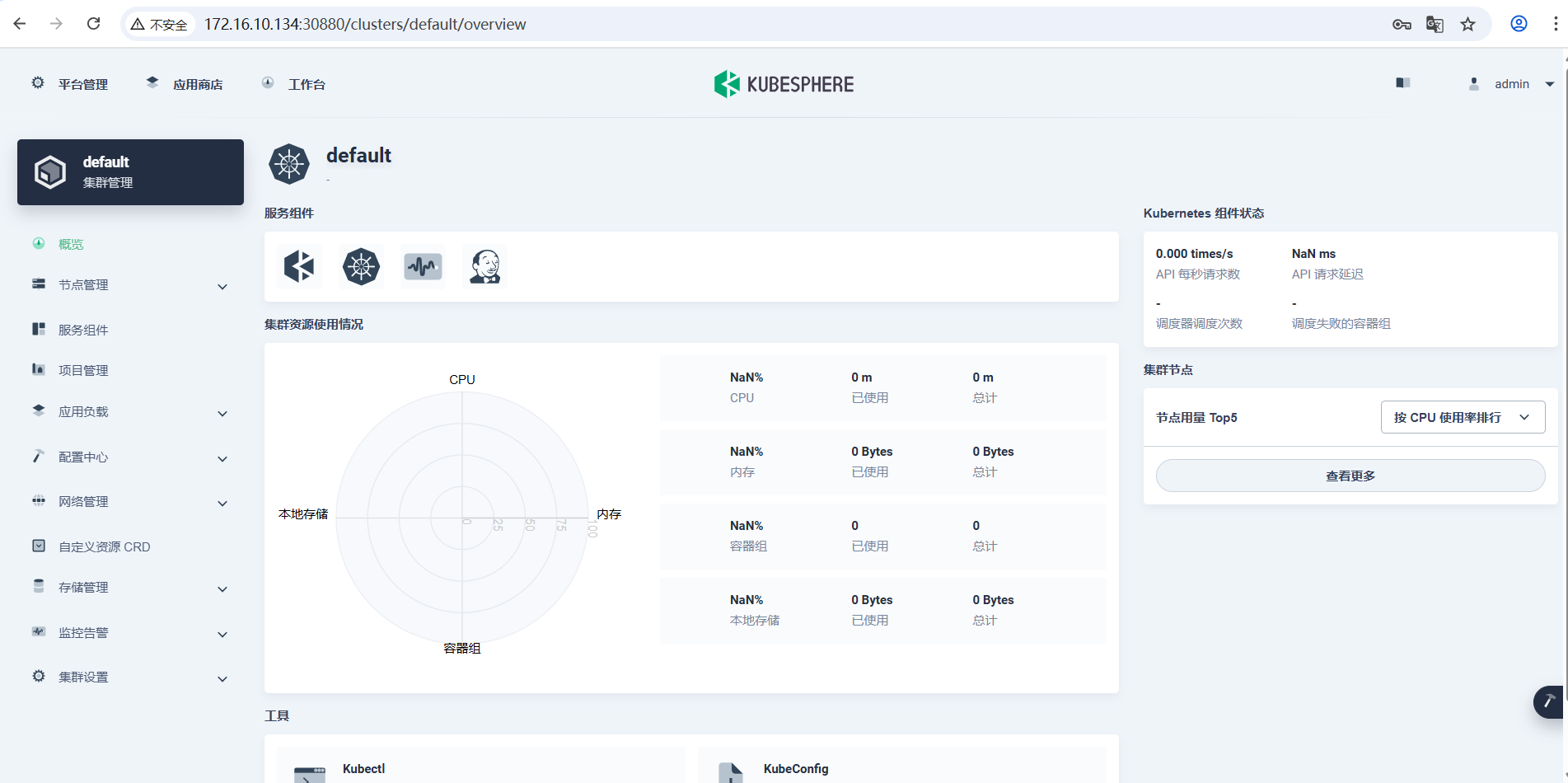

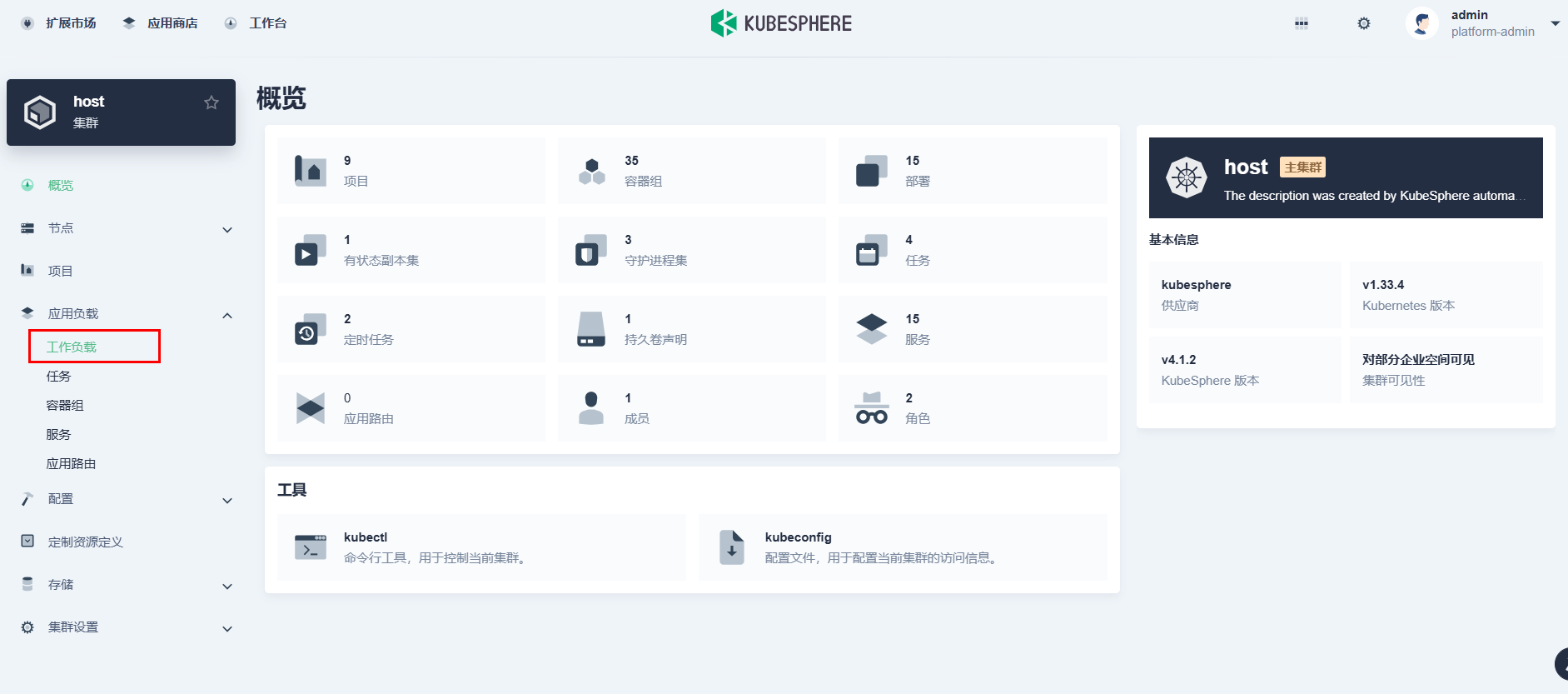

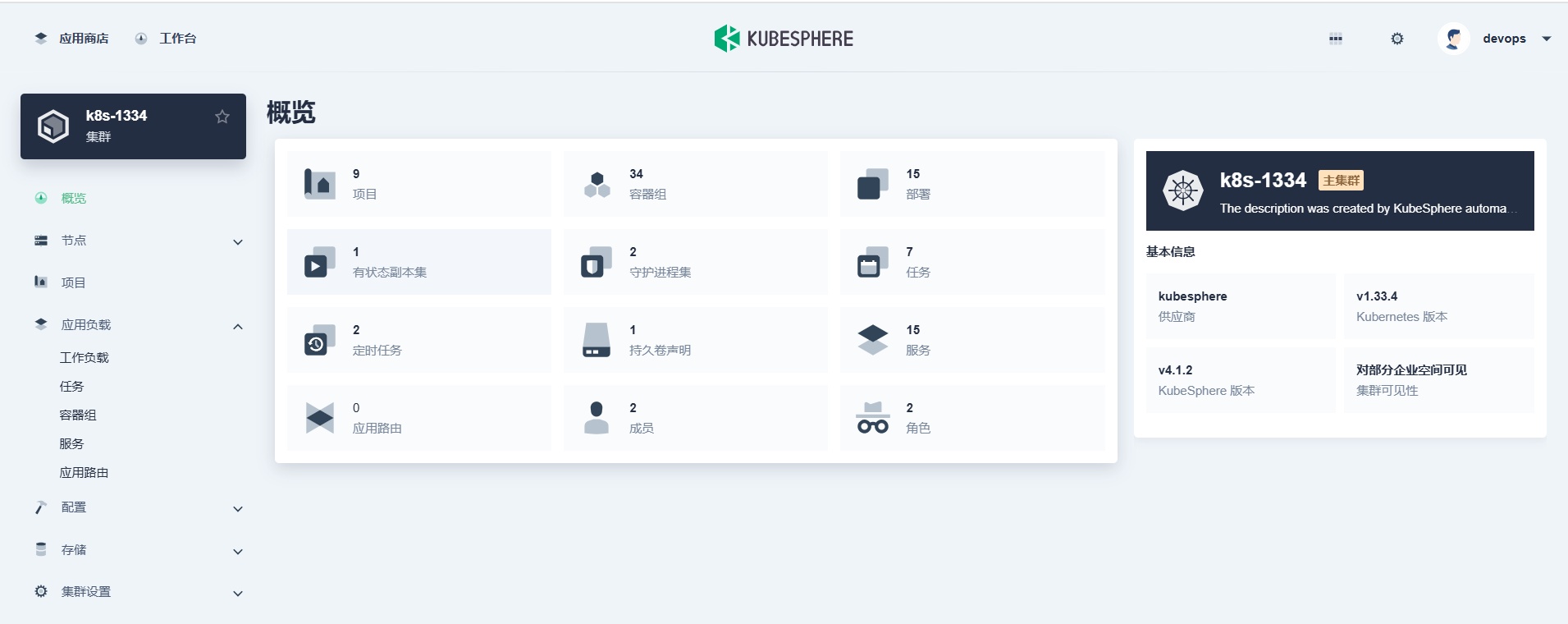

Finally, change the login password; you are now in the KubeSphere management console.

1.2 Deploying a k8s cluster plus KubeSphere 4.1.2 with KubeKey

1.2.1 Download KubeKey

# Download from the China zone (use this when access to GitHub is restricted)

export KKZONE=cn

curl -sfL https://get-kk.kubesphere.io | sh -

1.2.2 List the Kubernetes versions supported by KubeKey

./kk version --show-supported-k8s

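1.2.3 Create the Kubernetes cluster deployment configuration

This article uses v1.33.4, the newest version available at the time of writing, so the configuration file is named ksp-k8s-v1334.yaml. If no file name is given, it defaults to config-sample.yaml.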

./kk create config -f ksp-k8s-v1334.yaml --with-kubernetes v1.33.4

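1.2.4 Edit the configuration file

- hosts: the IP, SSH user and SSH password of each node
- roleGroups: 2 etcd nodes, 1 control-plane node and 2 worker nodes
- internalLoadbalancer: enable the built-in HAProxy load balancer
- domain: custom domain name; without special requirements, keep the default lb.kubesphere.local
- clusterName: custom cluster name; without special requirements, keep the default cluster.local
- autoRenewCerts: renews certificates automatically when they expire; defaults to true
- containerManager: use containerd as the container runtime
- storage.openebs.basePath: not present by default; added here to set OpenEBS's default storage path to /data/nfs-data/kubesphere
- registry.privateRegistry: optional; works around the official Docker Hub images being unreachable
- registry.namespaceOverride: optional; works around the official Docker Hub images being unreachable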

apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: k8s-ks-master, address: 172.16.10.134, internalAddress: 172.16.10.134, user: root, password: "123456"}
  - {name: k8s-ks-node1, address: 172.16.10.135, internalAddress: 172.16.10.135, user: root, password: "123456"}
  - {name: k8s-ks-node2, address: 172.16.10.136, internalAddress: 172.16.10.136, user: root, password: "123456"}
  roleGroups:
    etcd:
    - k8s-ks-node1
    - k8s-ks-node2
    control-plane:
    - k8s-ks-master
    worker:
    - k8s-ks-node1
    - k8s-ks-node2
  controlPlaneEndpoint:
    ## Internal loadbalancer for apiservers
    internalLoadbalancer: haproxy
    domain: lb.kubesphere.local
    address: ""
    port: 6443
  kubernetes:
    version: v1.33.4
    clusterName: cluster.local
    autoRenewCerts: true
    containerManager: containerd
  etcd:
    type: kubekey
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
    multusCNI:
      enabled: false
  registry:
    privateRegistry: "registry.cn-beijing.aliyuncs.com"
    namespaceOverride: "kubesphereio"
    registryMirrors: []
    insecureRegistries: []
  addons: []
  storage:
    openebs:
      basePath: /data/nfs-data/kubesphere

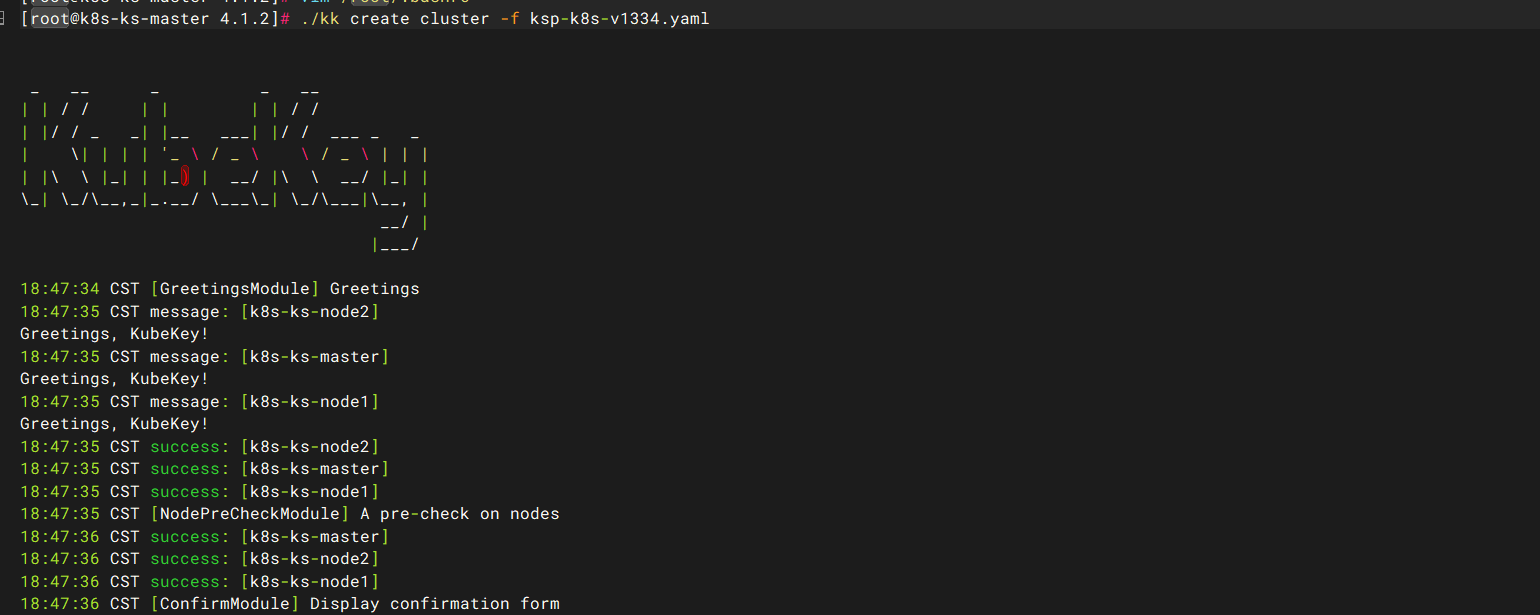

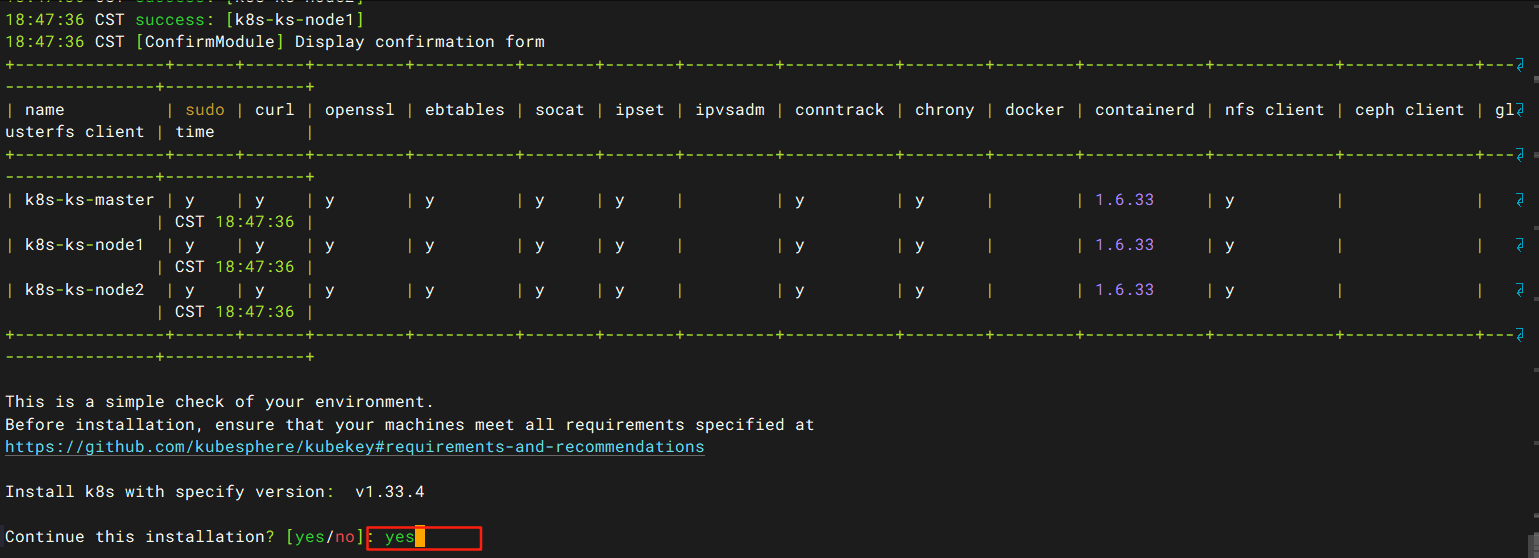

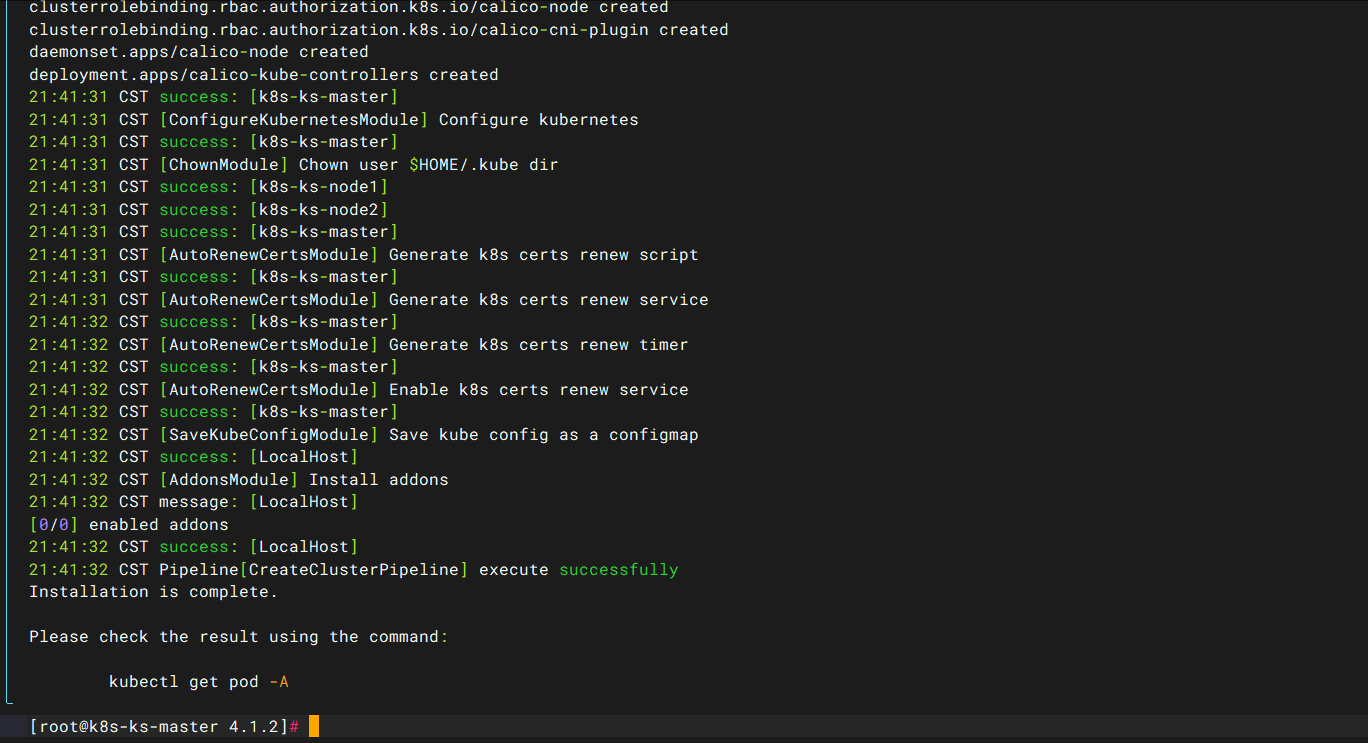
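KubeKey's pre-flight check requires socat and conntrack on every node, and ebtables and ipset are recommended. Below is a sketch that installs them over SSH. It assumes the CentOS 7 nodes and root access listed in the hosts section above. The function name is hypothetical, and DRY_RUN=1 prints the commands instead of running them.

```shell
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Install KubeKey's node dependencies on each host given as an argument
prepare_nodes() {
  for host in "$@"; do
    run ssh "root@$host" yum install -y socat conntrack ebtables ipset
  done
}

# Usage (IPs from ksp-k8s-v1334.yaml):
#   prepare_nodes 172.16.10.134 172.16.10.135 172.16.10.136
```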

1.2.5 Deploy k8s

./kk create cluster -f ksp-k8s-v1334.yaml

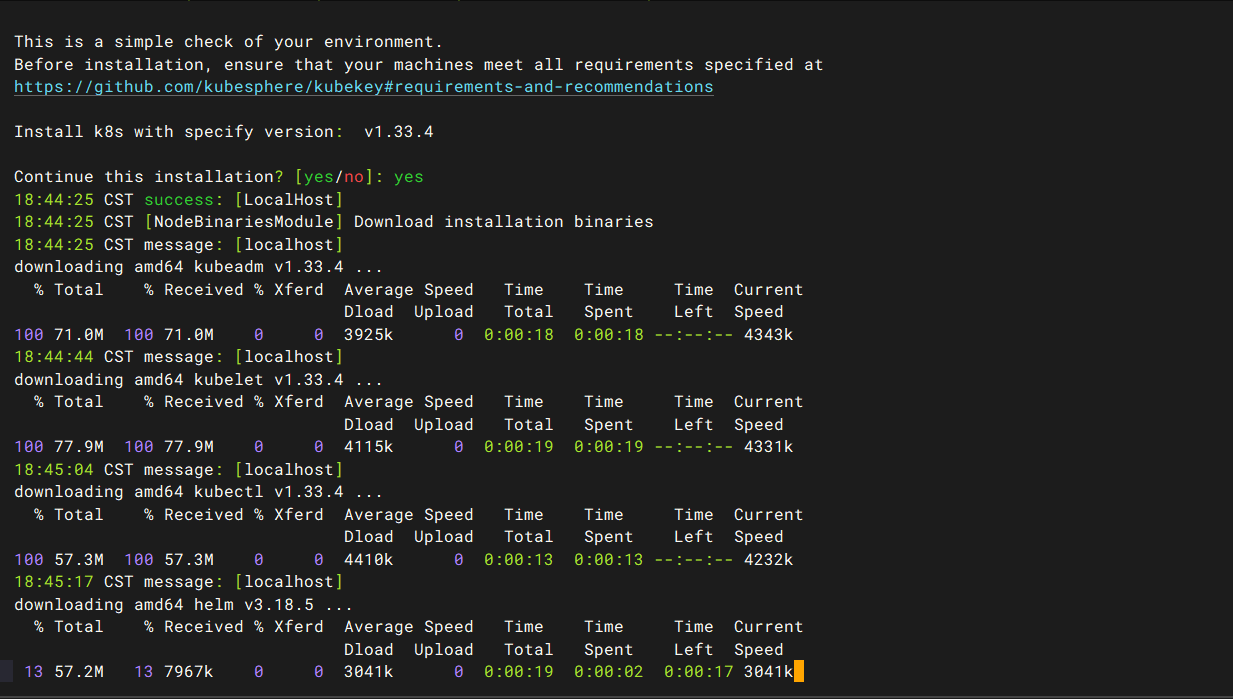

KubeKey then installs the cluster; how long this takes depends on your network speed.

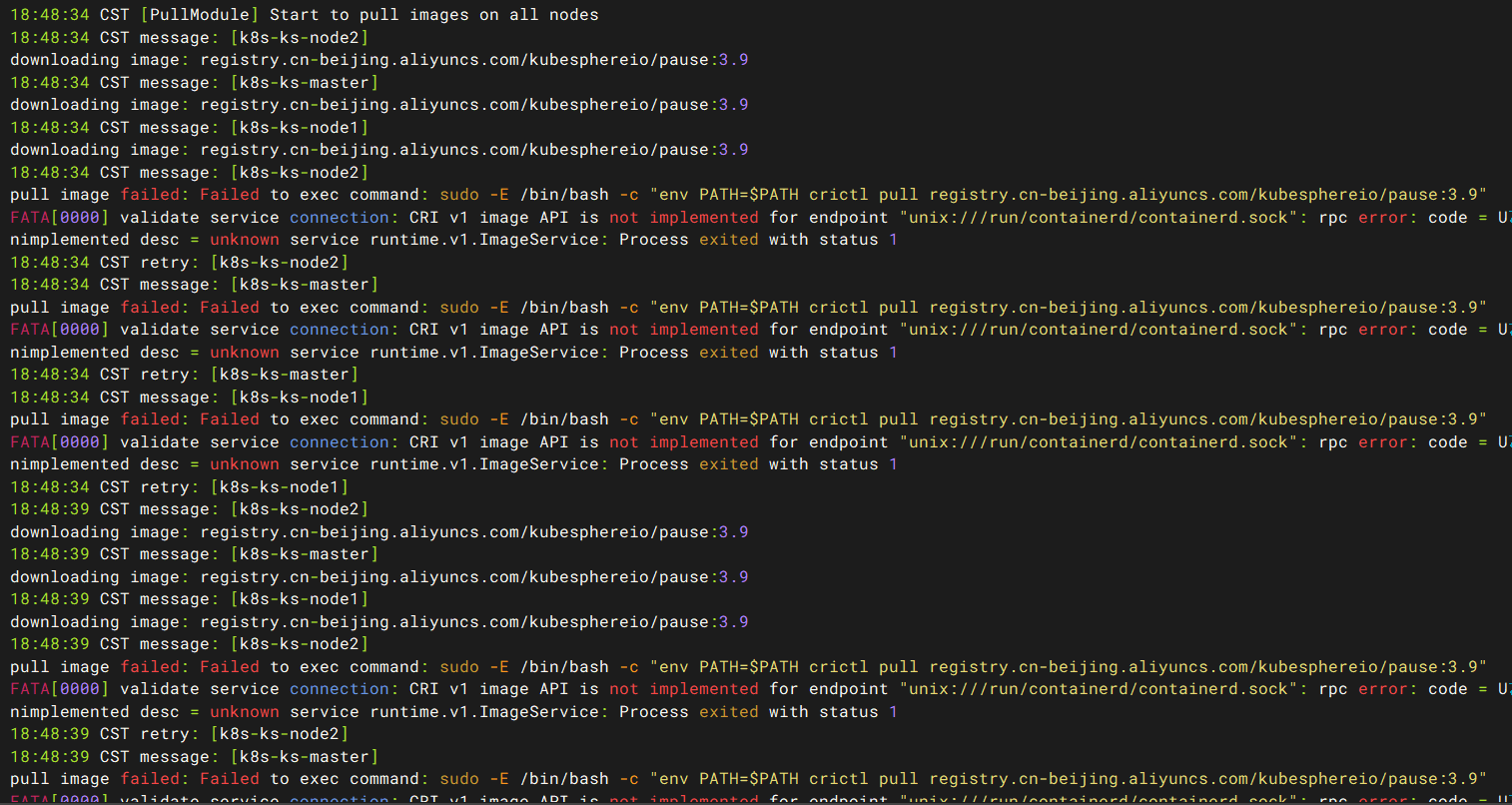

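One annoyance: crictl could not pull images because the containerd configuration written by kk was faulty, so the containerd config file had to be fixed by hand: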

# Back up the existing config (if any)
mv /etc/containerd/config.toml /etc/containerd/config.toml.bak
# Generate the default config
mkdir -p /etc/containerd
containerd config default | sudo tee /etc/containerd/config.toml
# Edit /etc/containerd/config.toml (e.g. with vim) and set SystemdCgroup = true to use the systemd cgroup driver (critical!)
# Restart containerd
systemctl restart containerd
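You can flip the SystemdCgroup switch with sed instead of editing the file in vim. The sketch below assumes GNU sed and a file generated by containerd config default in the containerd 1.x layout, where the runc options contain the line SystemdCgroup = false. The function name is hypothetical.

```shell
# Switch runc to the systemd cgroup driver in a containerd config file (GNU sed)
enable_systemd_cgroup() {
  cfg="${1:-/etc/containerd/config.toml}"
  # Only rewrites an existing "SystemdCgroup = false"; indentation is kept
  sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' "$cfg"
  # Returns non-zero if no "SystemdCgroup = true" line is present afterwards
  grep -q 'SystemdCgroup = true' "$cfg"
}

# Usage:
#   enable_systemd_cgroup && systemctl restart containerd
```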

# Then run the deployment command again

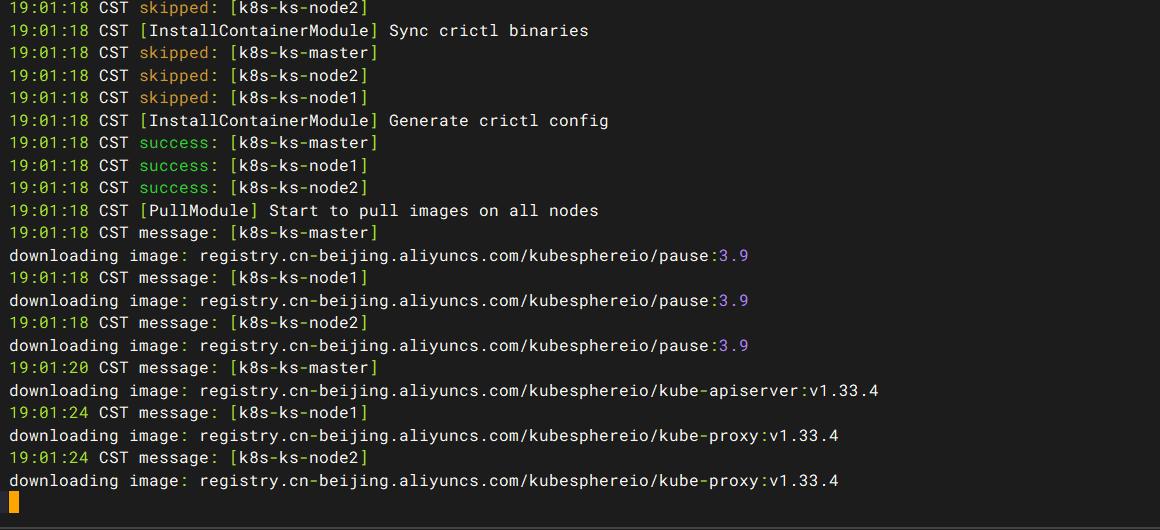

./kk create cluster -f ksp-k8s-v1334.yaml

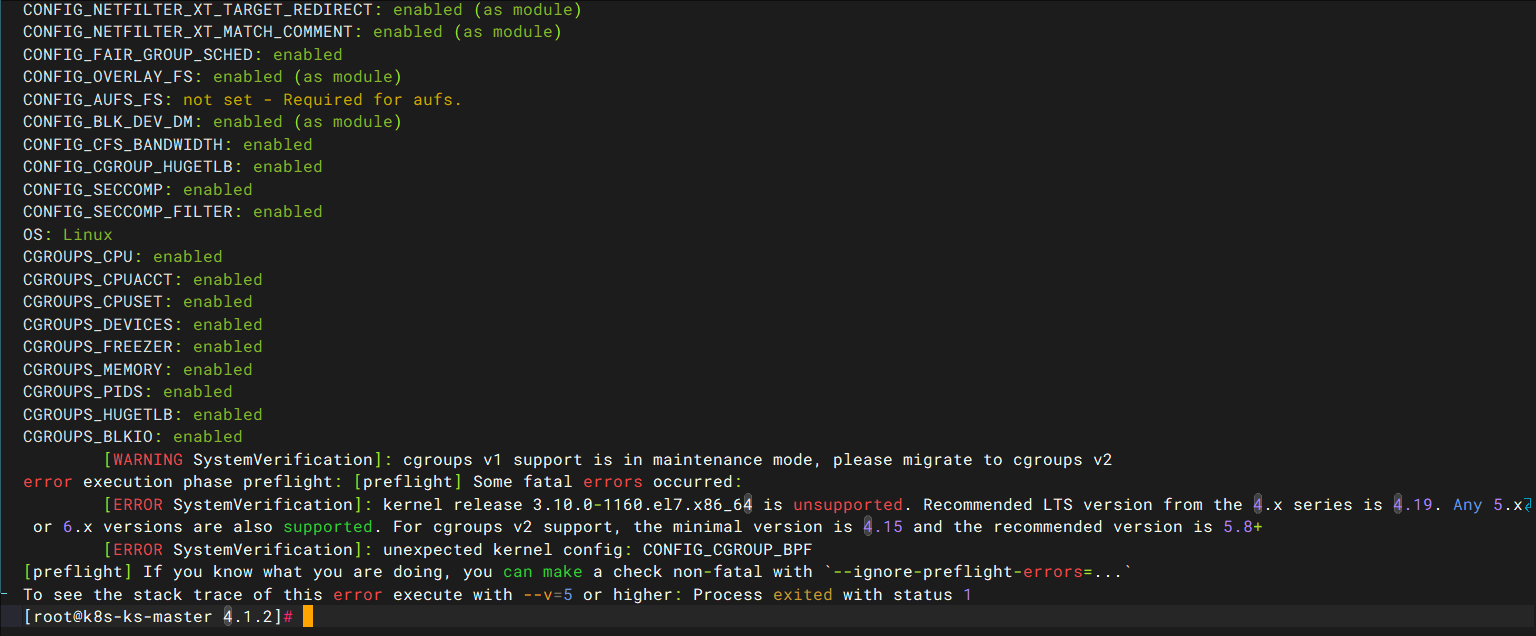

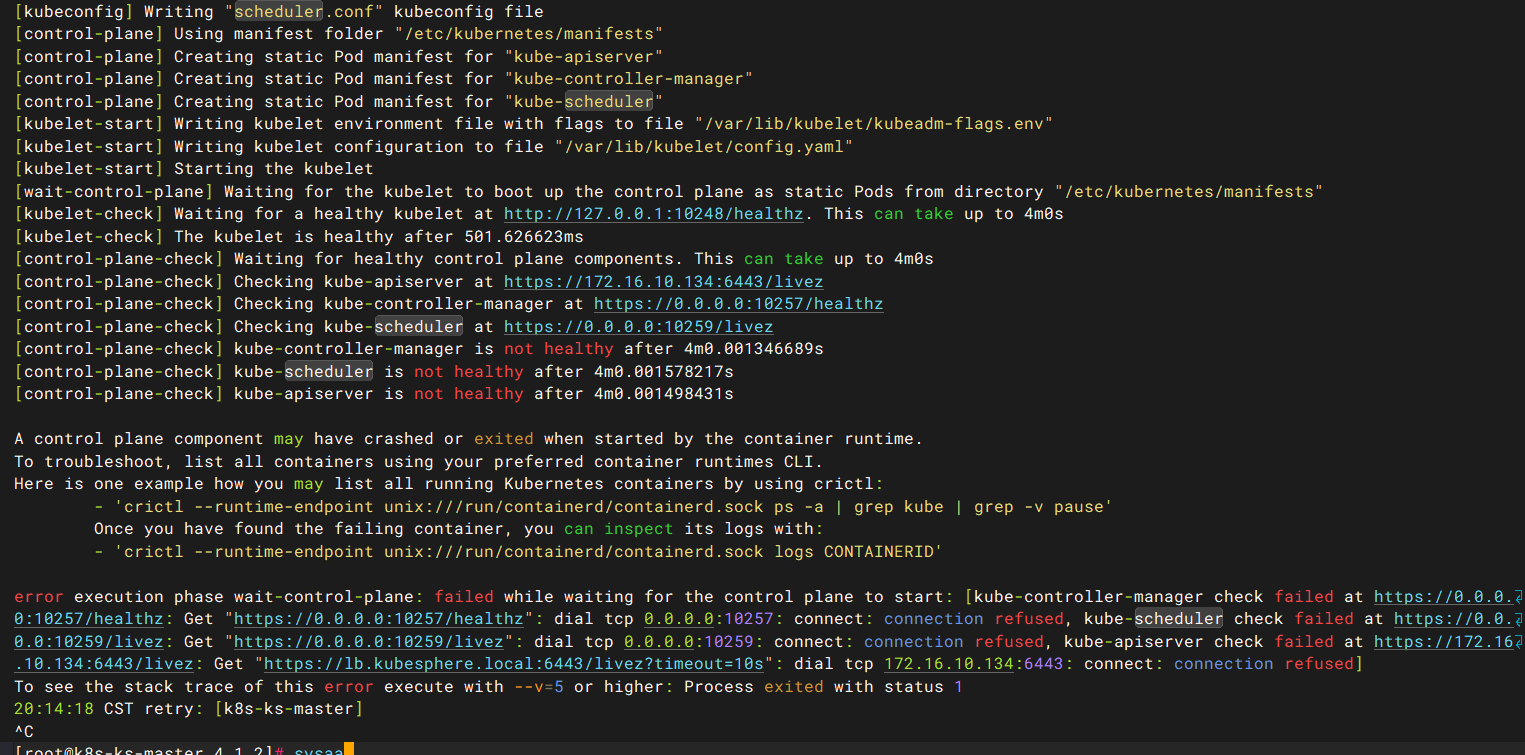

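The good times didn't last: the deployment failed again here.

The cause is that CentOS 7.9's default kernel (3.10) does not support this cluster, so the kernel has to be upgraded.

The upgrade steps are as follows: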

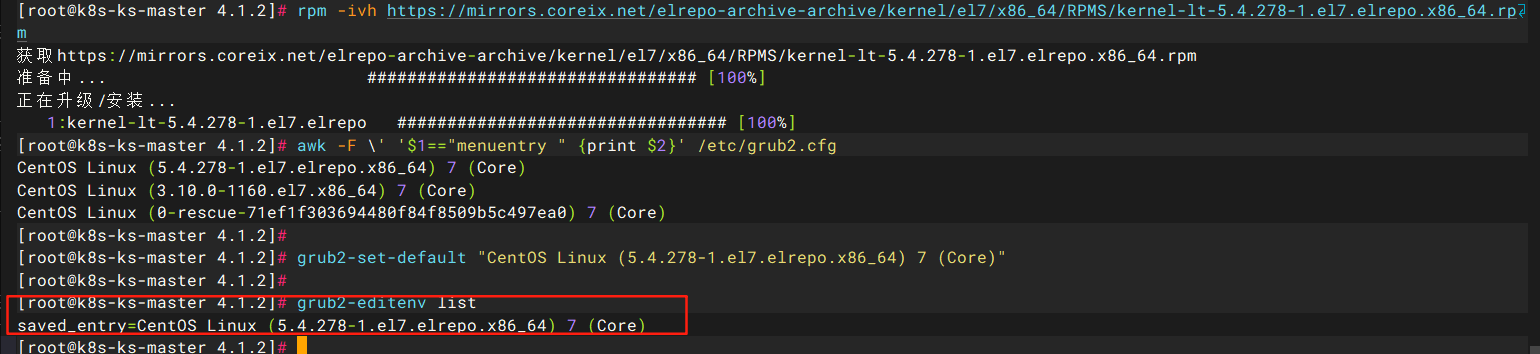

# 1. Back up the existing repo file
mv /etc/yum.repos.d/elrepo.repo /etc/yum.repos.d/elrepo.repo.bak
# 2. Pick the newer kernel and install it directly from the RPM
rpm -ivh https://mirrors.coreix.net/elrepo-archive-archive/kernel/el7/x86_64/RPMS/kernel-lt-5.4.278-1.el7.elrepo.x86_64.rpm
# 3. List the kernels in the boot menu
awk -F \' '$1=="menuentry " {print $2}' /etc/grub2.cfg
# 4. Boot the new kernel by default
grub2-set-default "CentOS Linux (5.4.278-1.el7.elrepo.x86_64) 7 (Core)"
grub2-editenv list
# 5. Reboot to apply
reboot
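After the reboot, confirm that the node actually booted into the new kernel. The sketch below uses GNU sort -V for the version comparison. The 5.4 threshold is just the kernel-lt version installed above, not a documented KubeKey minimum. The function names are hypothetical.

```shell
# True (exit 0) if version $1 >= version $2, using version-aware sorting
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}

# Report whether the running (or given) kernel is at least 5.4
check_kernel() {
  current="${1:-$(uname -r)}"
  if version_ge "$current" 5.4; then
    echo "ok: $current"
  else
    echo "old kernel: $current" >&2
    return 1
  fi
}

# Usage, on each node after the reboot:
#   check_kernel
```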

Now there is a new error:

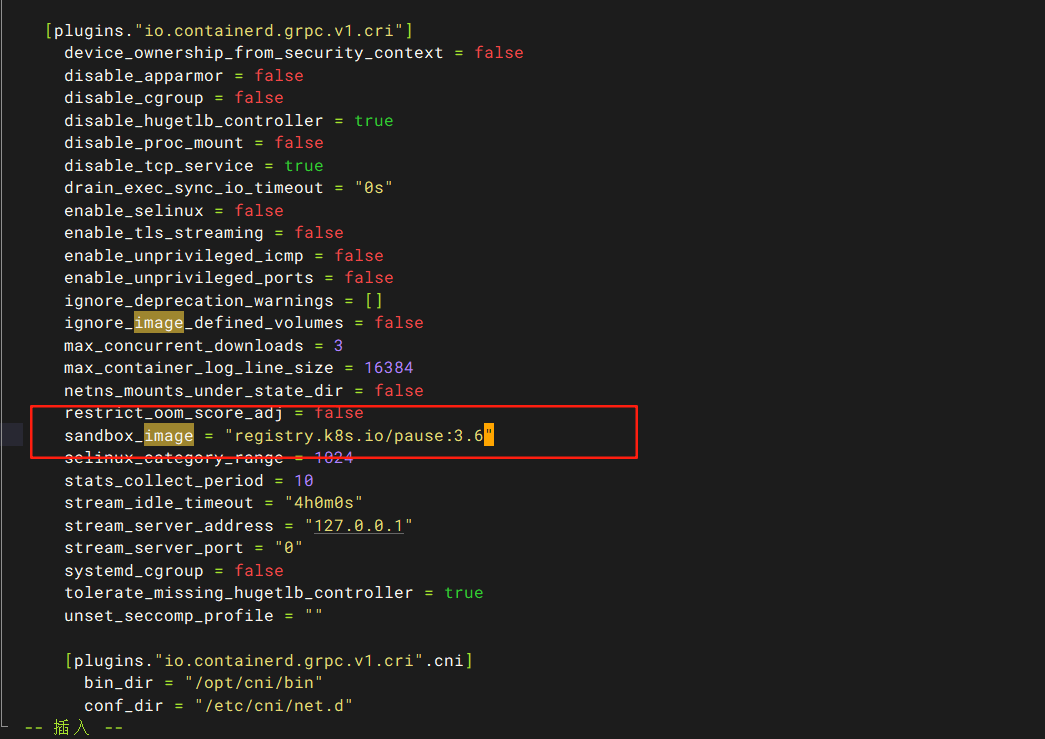

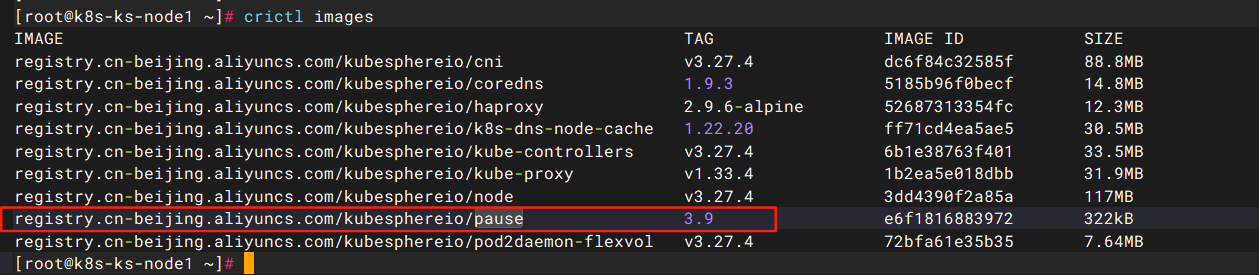

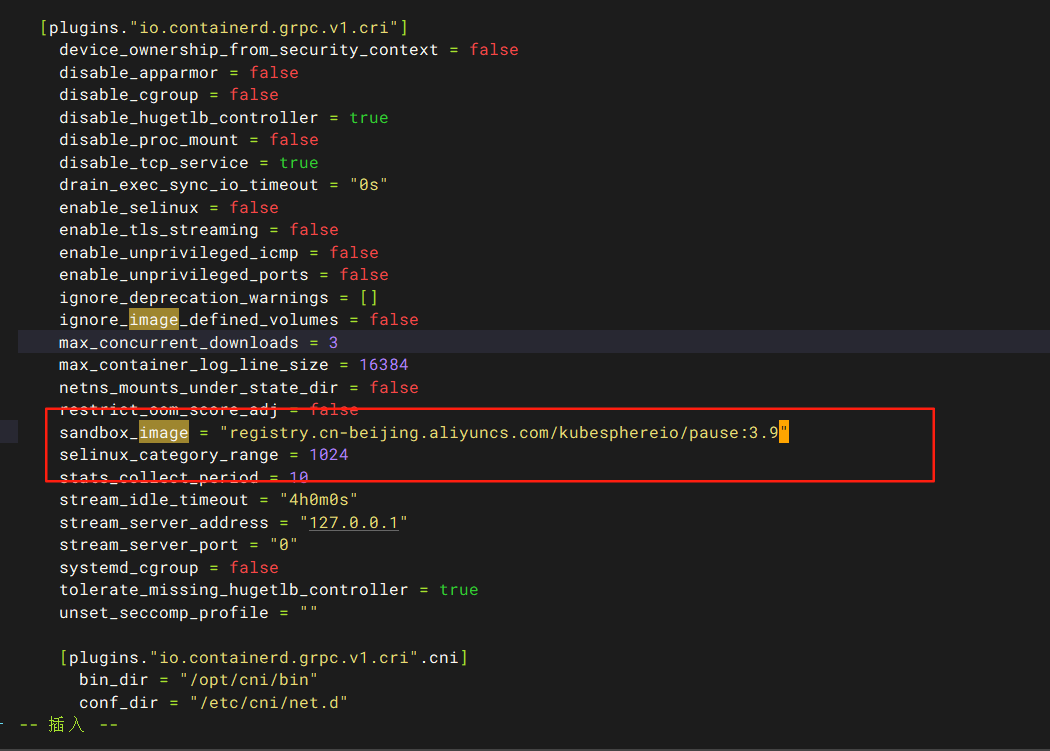

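On inspection, the pause image version referenced by containerd on the master did not match the image actually present, while the worker nodes were fine.

Edit /etc/containerd/config.toml (e.g. with vim) so that it references the actual image version, then run systemctl restart containerd.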
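You can script the pause-image fix the same way. The sketch below assumes GNU sed and the containerd 1.x key sandbox_image. The image reference should be whatever crictl images shows on the master. The value in the usage line is a hypothetical example, not the version from this article.

```shell
# Point containerd's sandbox (pause) image at the given reference (GNU sed)
set_sandbox_image() {
  image="$1"
  cfg="${2:-/etc/containerd/config.toml}"
  # Replace the value of the existing sandbox_image line, keeping its indentation
  sed -i "s#^\([[:space:]]*sandbox_image[[:space:]]*=\).*#\1 \"$image\"#" "$cfg"
}

# Usage (hypothetical image; use the pause image actually present on the node):
#   crictl images | grep pause
#   set_sandbox_image registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.10
#   systemctl restart containerd
```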

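Restart containerd, delete the cluster and redeploy it with the commands below. After a great deal of effort, KubeSphere was finally deployed. KubeKey makes deploying KubeSphere far more convenient, but it does not always go smoothly; you still need solid troubleshooting skills.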

# Restart containerd
systemctl restart containerd
# Delete the cluster
./kk delete cluster -f ksp-k8s-v1334.yaml
# Create the cluster
./kk create cluster -f ksp-k8s-v1334.yaml

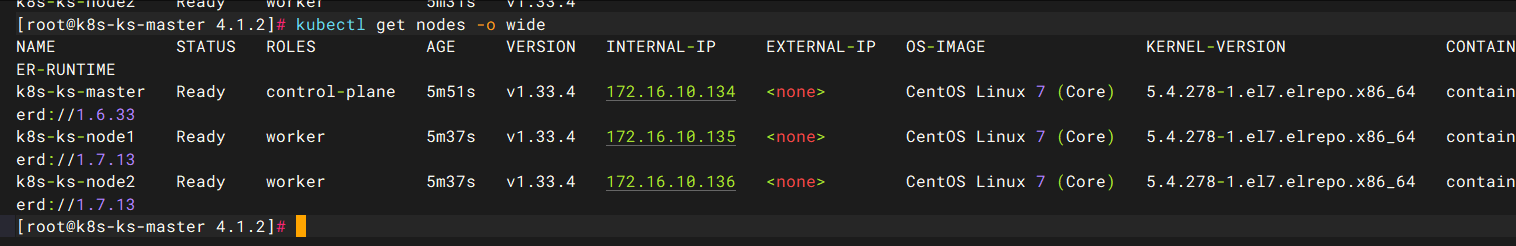

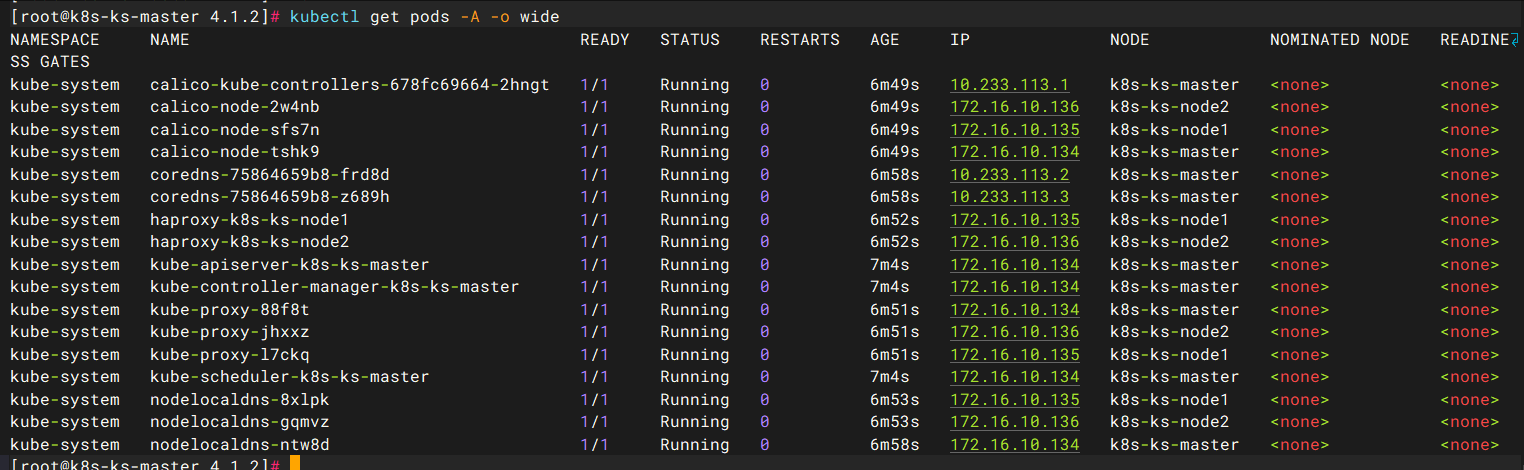

Verify the k8s cluster; the result is as expected.

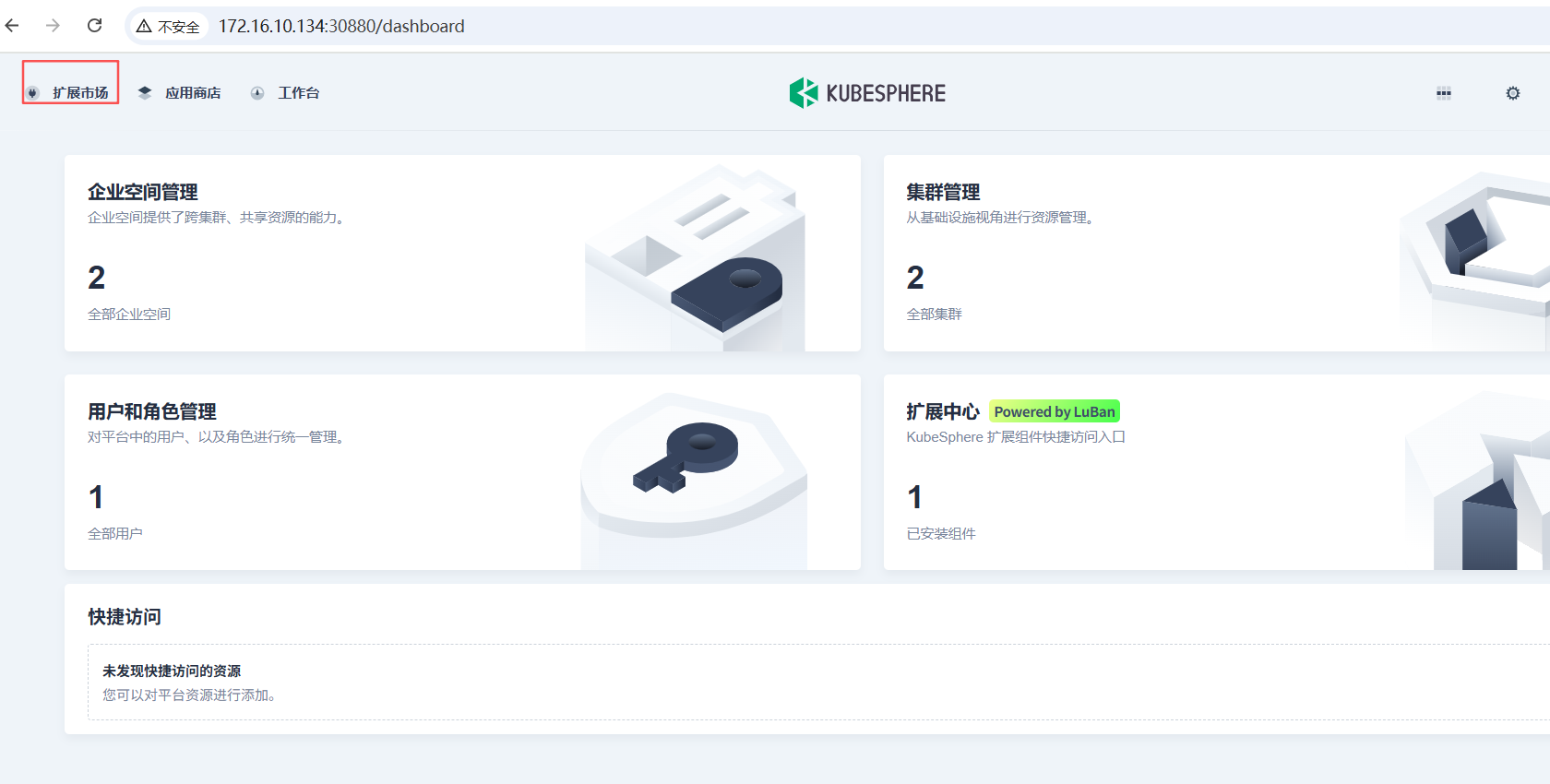

II. Usage

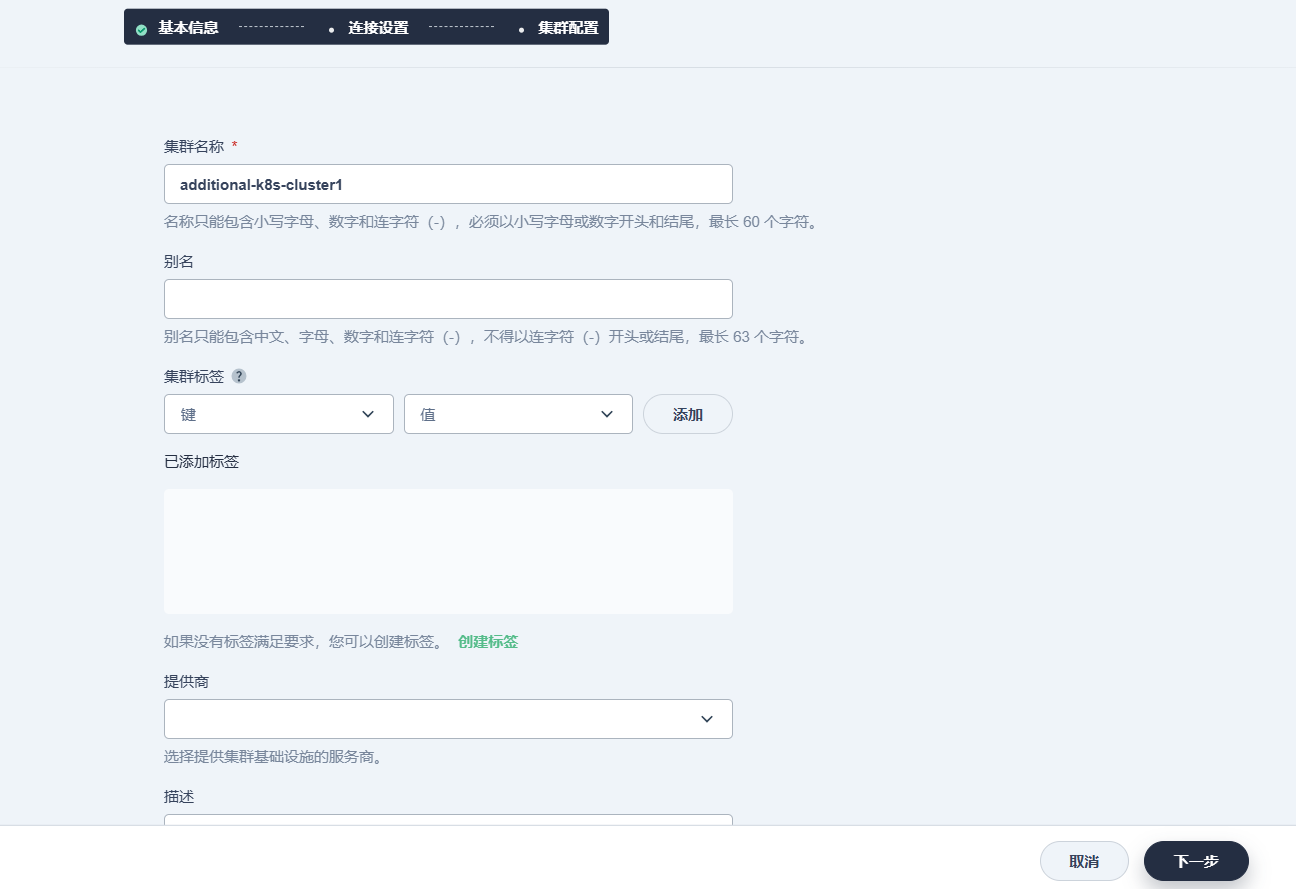

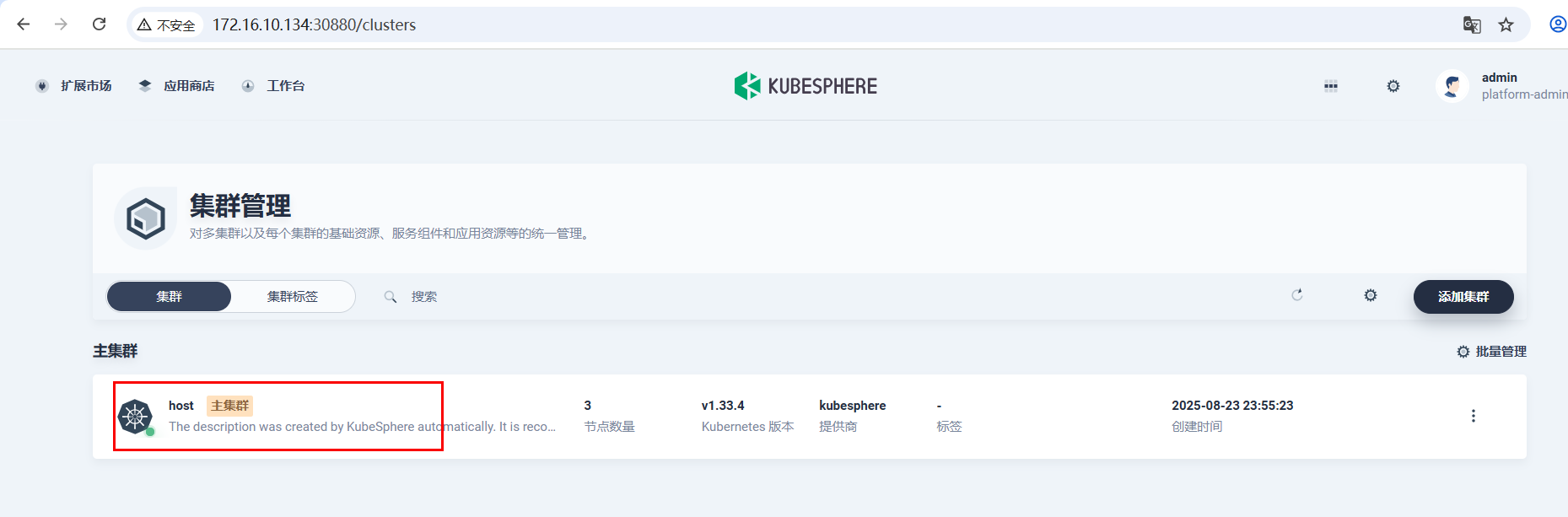

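2.1 Adding another k8s cluster

PS: Pull the ks-agent and extension images in advance! In KubeSphere 4.1+, adding a cluster automatically installs the agent in the member cluster, but the agent still pulls its images from docker.io, which is no longer reachable from mainland China, so the images must be pulled beforehand.

k8s v1.23.x and earlier (Docker runtime):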

# On worker nodes: pull the image and re-tag it

docker pull swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/kubesphere/ks-extensions-museum:latest

docker tag swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/kubesphere/ks-extensions-museum:latest docker.io/kubesphere/ks-extensions-museum:latest

# On master nodes: pull the images and re-tag them

docker pull swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/kubesphere/ks-apiserver:v4.1.2

docker tag swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/kubesphere/ks-apiserver:v4.1.2 docker.io/kubesphere/ks-apiserver:v4.1.2

docker pull swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/kubesphere/ks-controller-manager:v4.1.2

docker tag swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/kubesphere/ks-controller-manager:v4.1.2 docker.io/kubesphere/ks-controller-manager:v4.1.2

k8s v1.24.x and later (containerd runtime):

# On worker nodes: pull the image and re-tag it

ctr -n k8s.io images pull swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/kubesphere/ks-extensions-museum:latest

ctr -n k8s.io images tag swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/kubesphere/ks-extensions-museum:latest docker.io/kubesphere/ks-extensions-museum:latest

# On master nodes: pull the images and re-tag them

ctr -n k8s.io images pull swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/kubesphere/ks-apiserver:v4.1.2

ctr -n k8s.io images tag swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/kubesphere/ks-apiserver:v4.1.2 docker.io/kubesphere/ks-apiserver:v4.1.2

ctr -n k8s.io images pull swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/kubesphere/ks-controller-manager:v4.1.2

ctr -n k8s.io images tag swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/kubesphere/ks-controller-manager:v4.1.2 docker.io/kubesphere/ks-controller-manager:v4.1.2
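The pull-and-retag pairs above all follow one pattern: pull the mirror prefix plus the original reference, then tag it back to the original reference. Below is a sketch that loops over the references, assuming the same swr.cn-north-4 mirror. Setting RUNTIME=docker switches to the docker commands for v1.23.x-and-earlier nodes. The function and variable names are hypothetical.

```shell
MIRROR=swr.cn-north-4.myhuaweicloud.com/ddn-k8s

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Pull $1 and tag it as $2 with docker or containerd (k8s.io namespace)
pull_and_retag() {
  if [ "${RUNTIME:-containerd}" = docker ]; then
    run docker pull "$1"
    run docker tag "$1" "$2"
  else
    run ctr -n k8s.io images pull "$1"
    run ctr -n k8s.io images tag "$1" "$2"
  fi
}

# Each argument is the reference the agent expects, e.g. docker.io/kubesphere/ks-apiserver:v4.1.2
mirror_images() {
  for img in "$@"; do
    pull_and_retag "$MIRROR/$img" "$img"
  done
}

# Usage:
#   worker: mirror_images docker.io/kubesphere/ks-extensions-museum:latest
#   master: mirror_images docker.io/kubesphere/ks-apiserver:v4.1.2 \
#                         docker.io/kubesphere/ks-controller-manager:v4.1.2
```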

Once the images for your runtime have been pulled, you can add the new cluster.

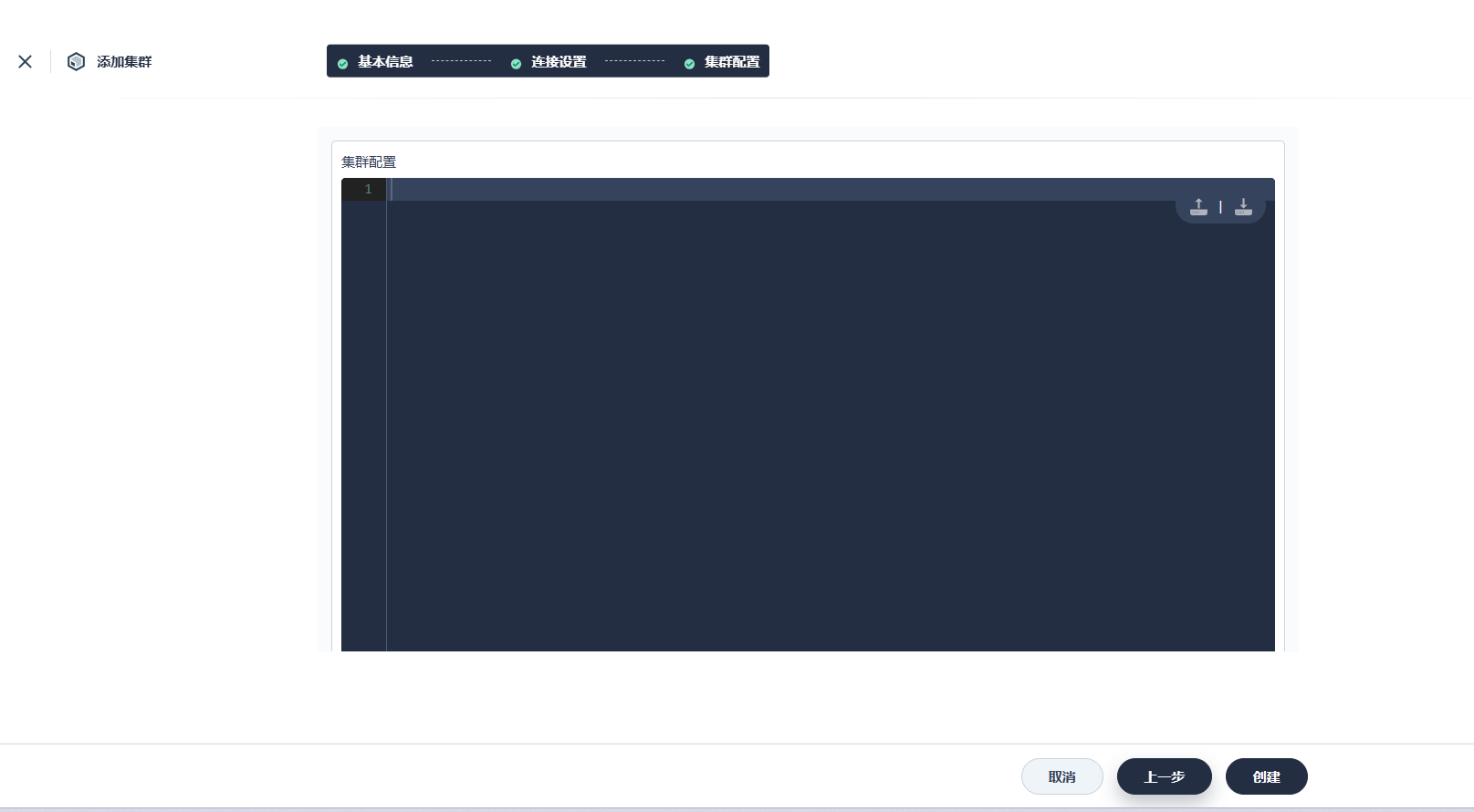

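Only the cluster name, which serves as its unique identifier, is required. The alias and labels can be changed later.

The kubeconfig can be obtained with cat $HOME/.kube/config.

The cluster configuration can be left as-is.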
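Check one thing in that kubeconfig: its server field must be reachable from the host cluster. If the member cluster was built with KubeKey, the field typically points at https://lb.kubesphere.local:6443, a name that may only resolve on the member's own nodes. Below is a sketch that rewrites the server URL with sed. The function name and the example address are assumptions.

```shell
# Replace the value of every "server:" line in a kubeconfig read from stdin
rewrite_server() {
  sed "s#^\([[:space:]]*server:\).*#\1 $1#"
}

# Usage on the member cluster (the address is an example; use one the host can reach):
#   kubectl config view --raw --minify | rewrite_server https://172.16.10.136:6443 > member.kubeconfig
```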

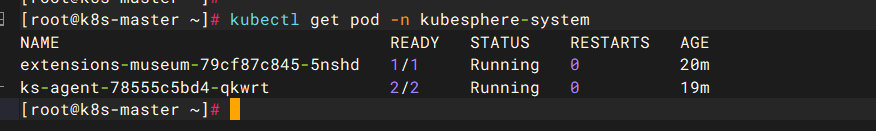

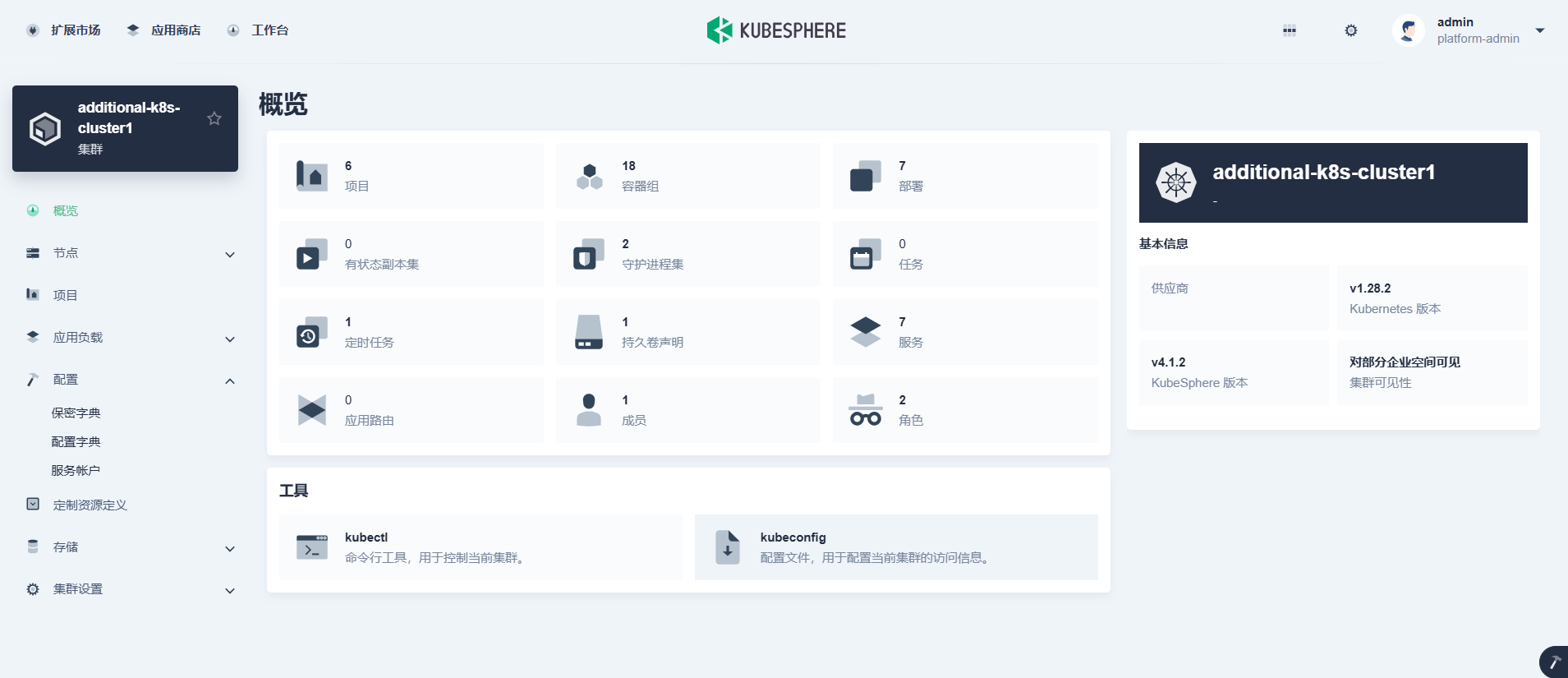

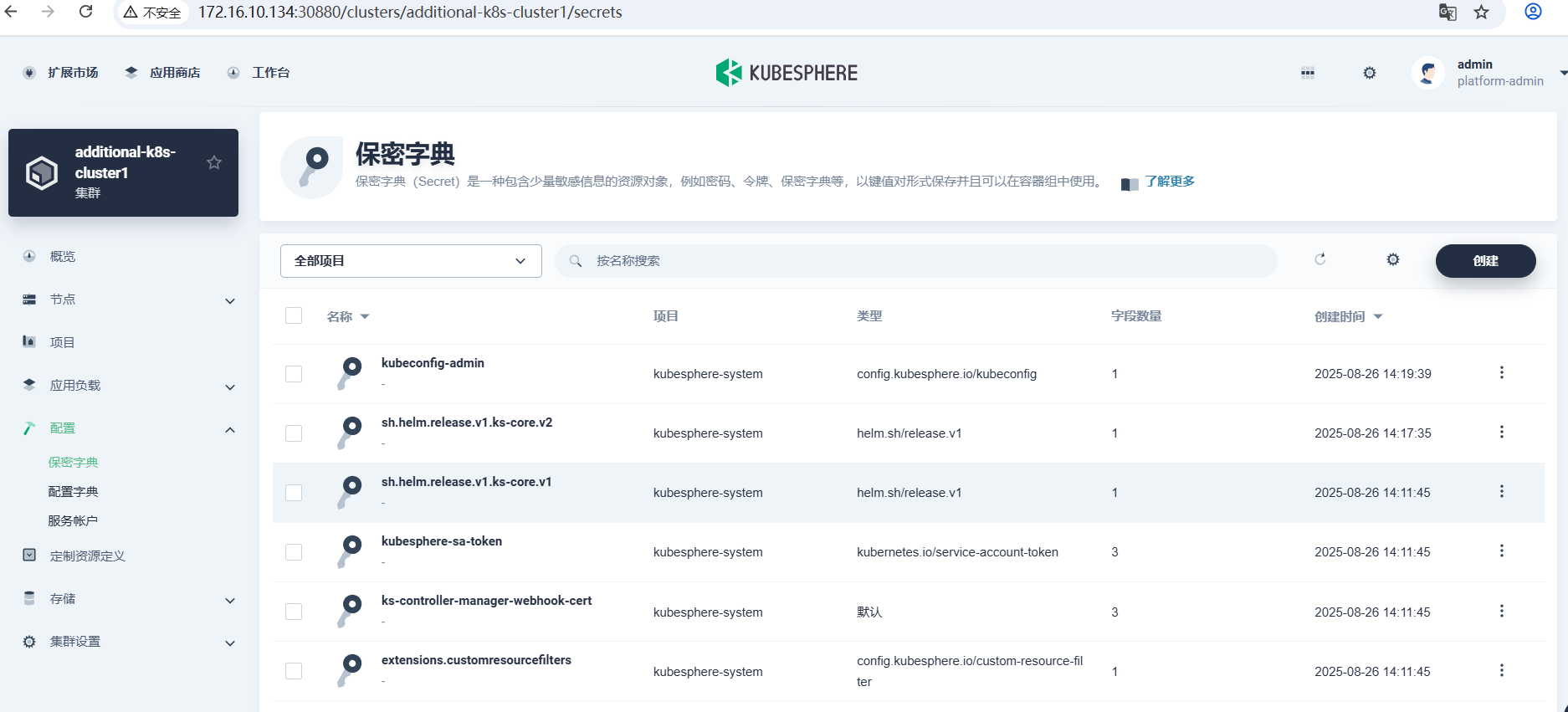

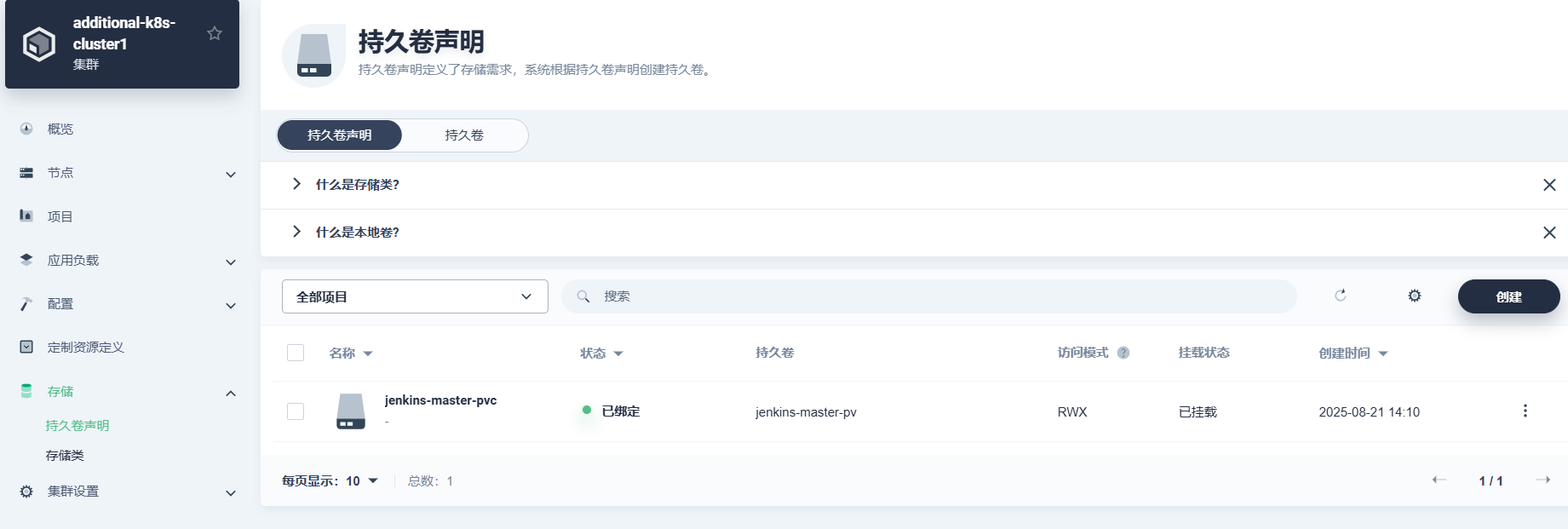

After creation, check the agent's status and the cluster information in the added cluster:

Deployments, ConfigMaps, Secrets, PVCs and so on.

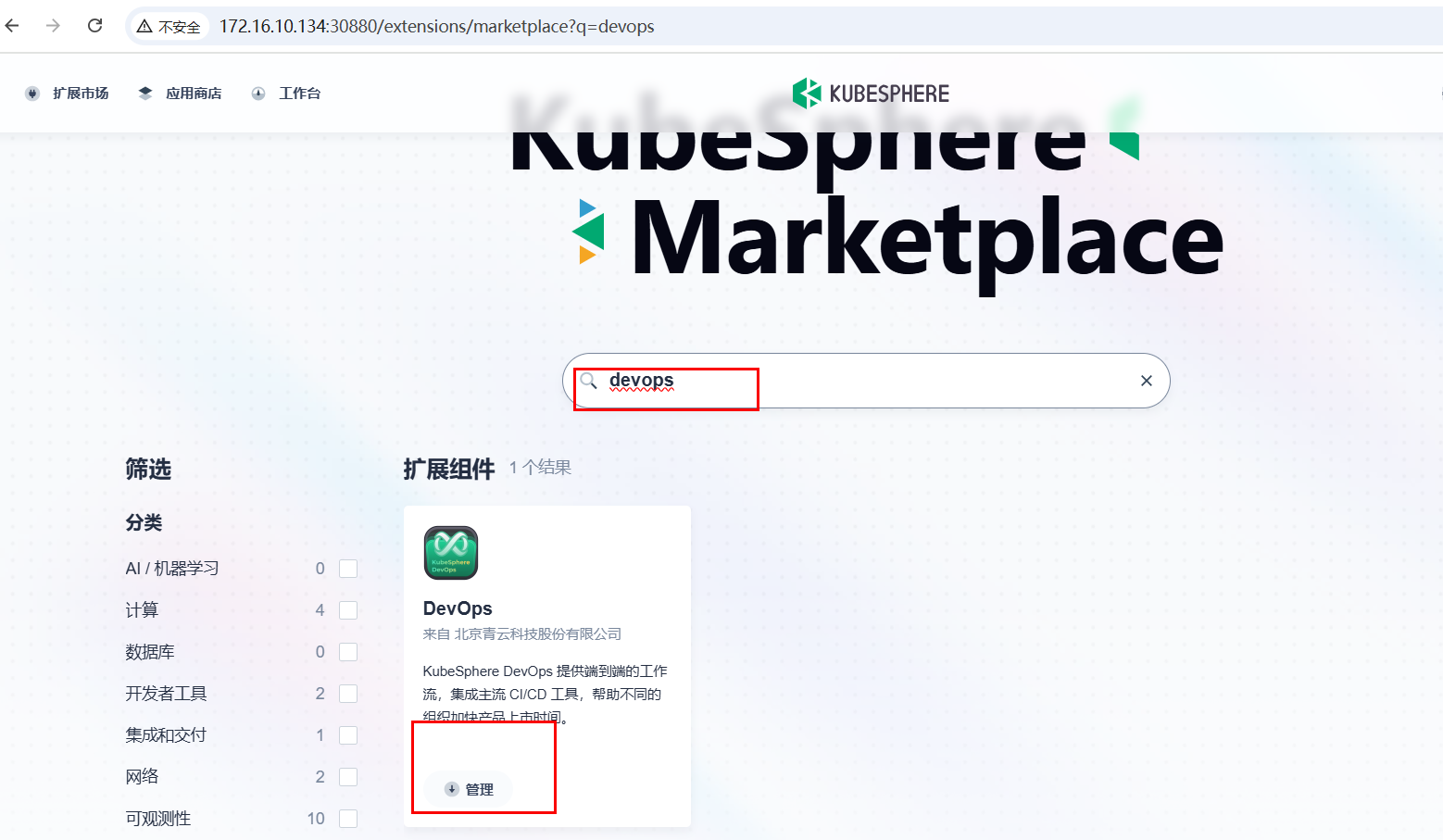

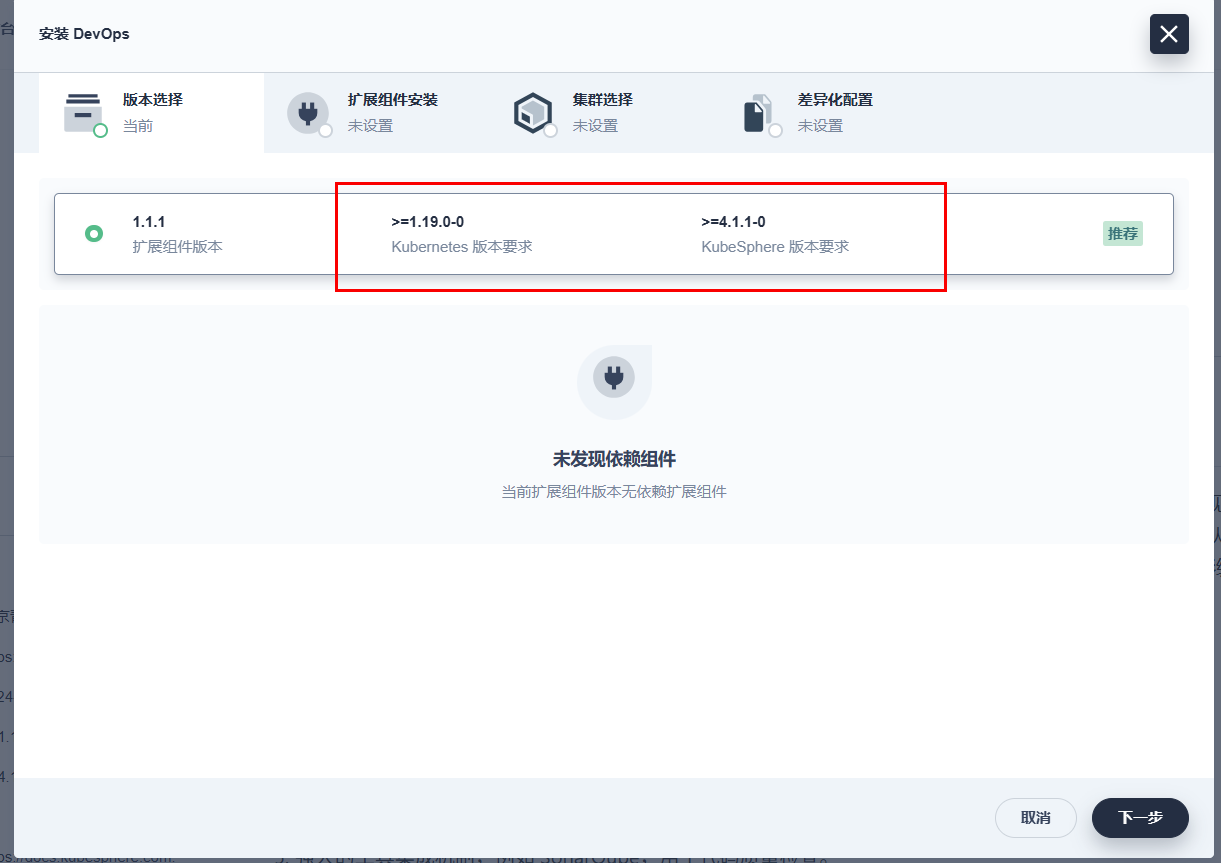

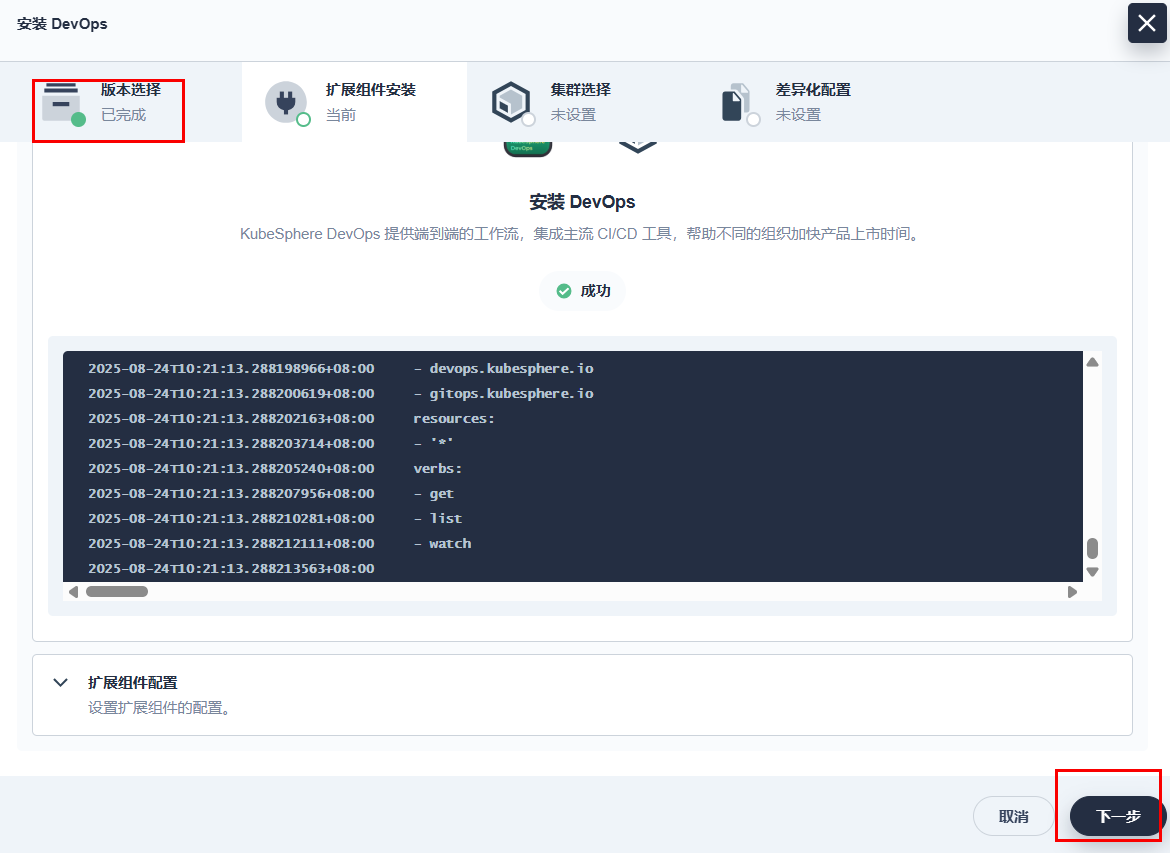

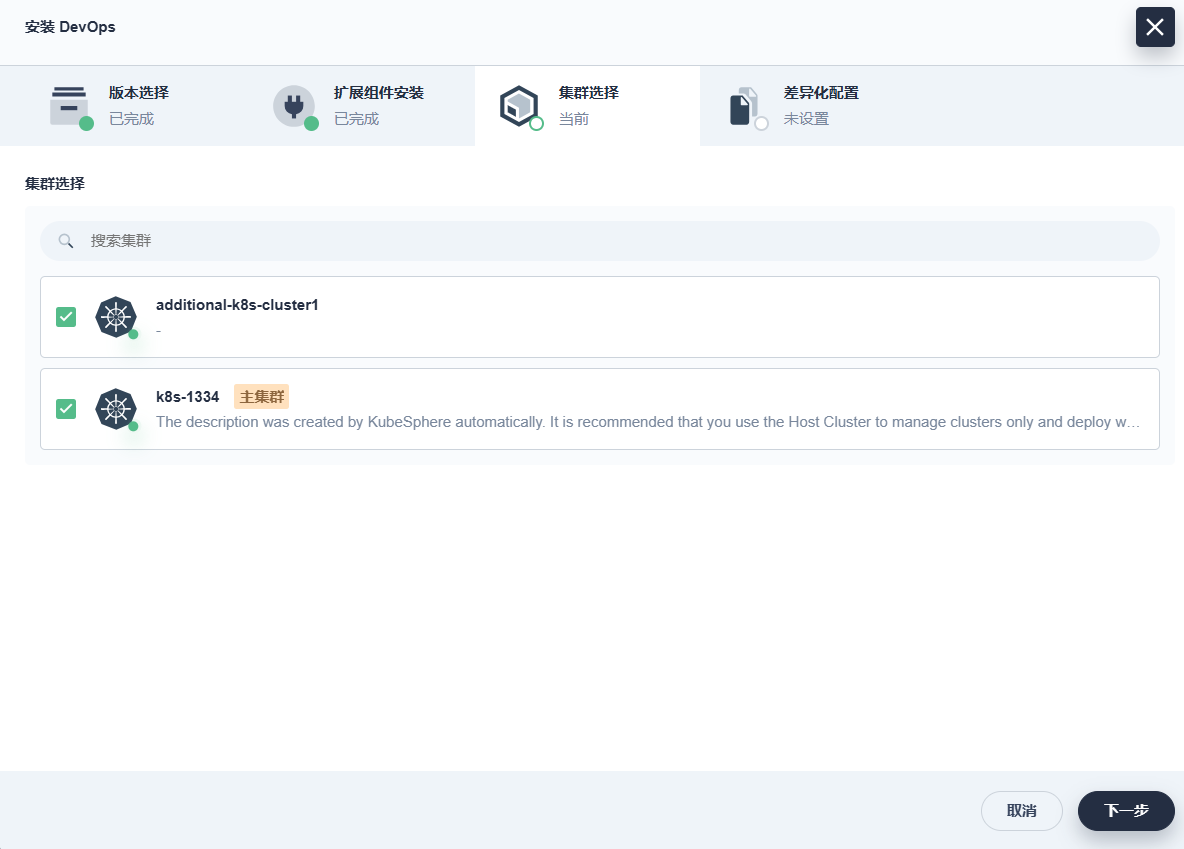

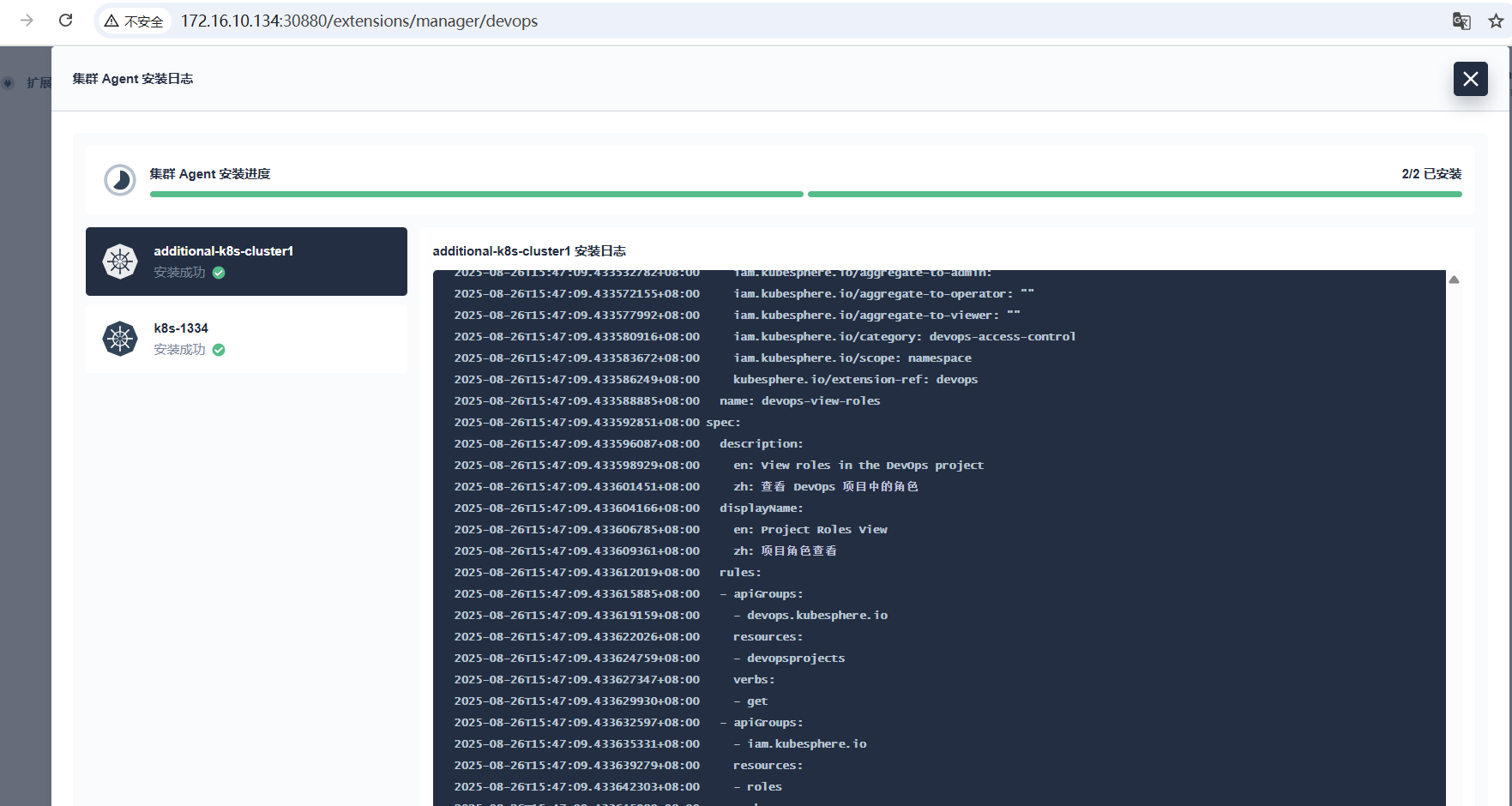

2.2 Installing the DevOps (pipeline) extension

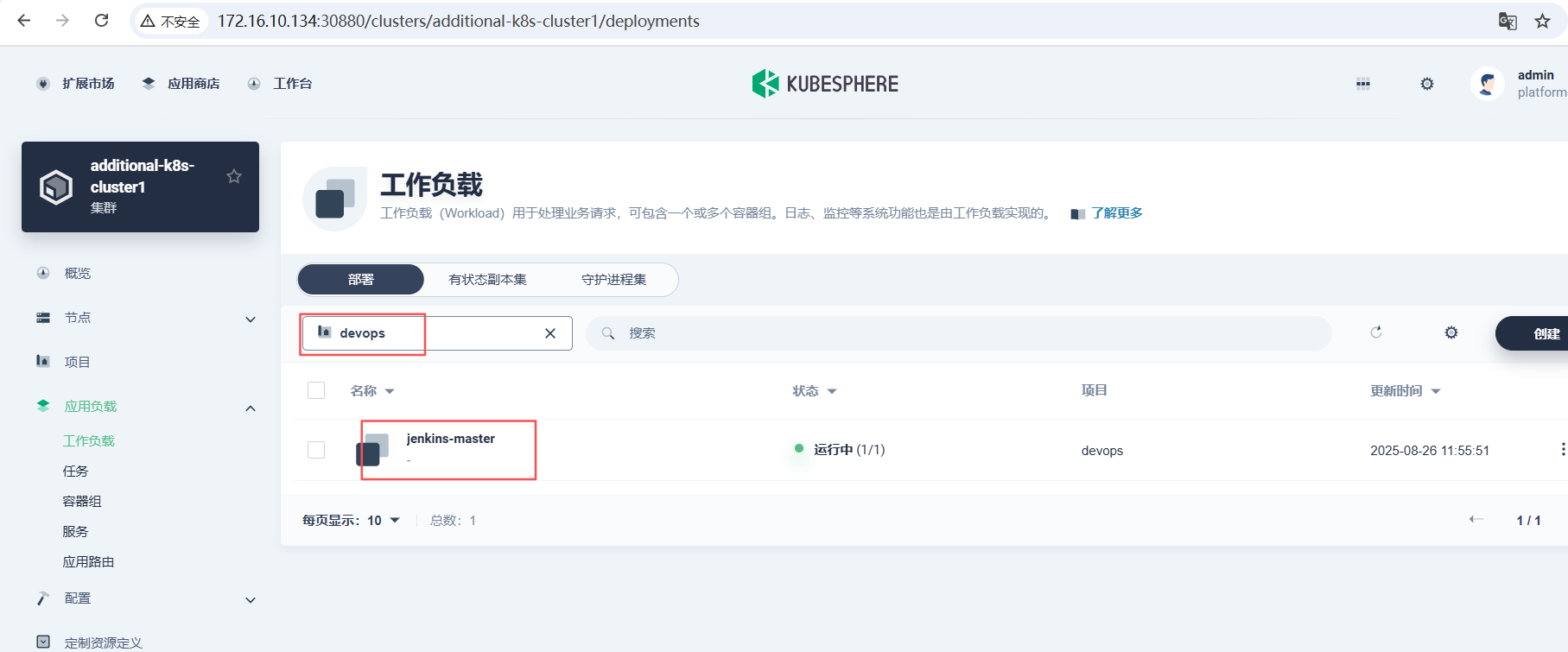

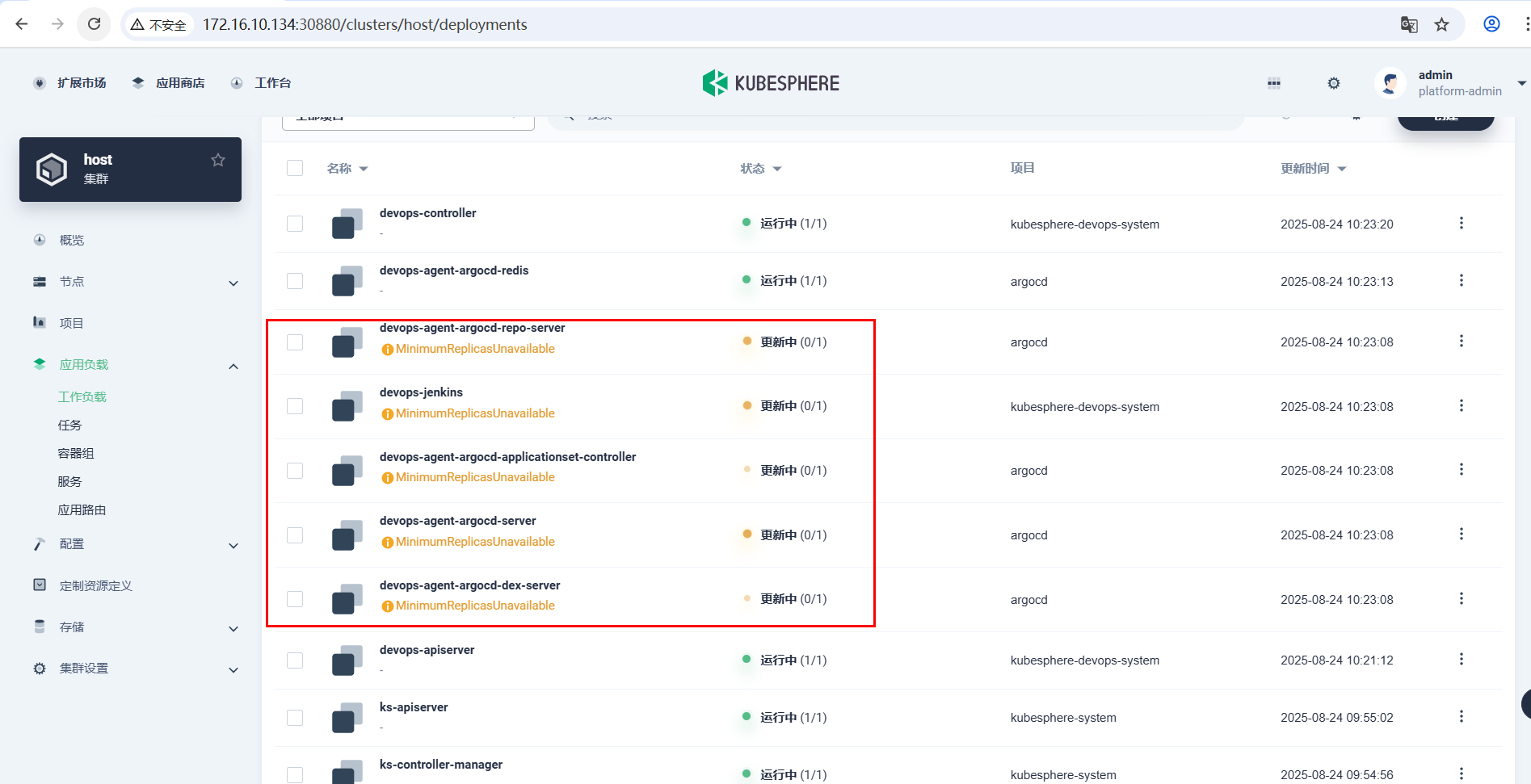

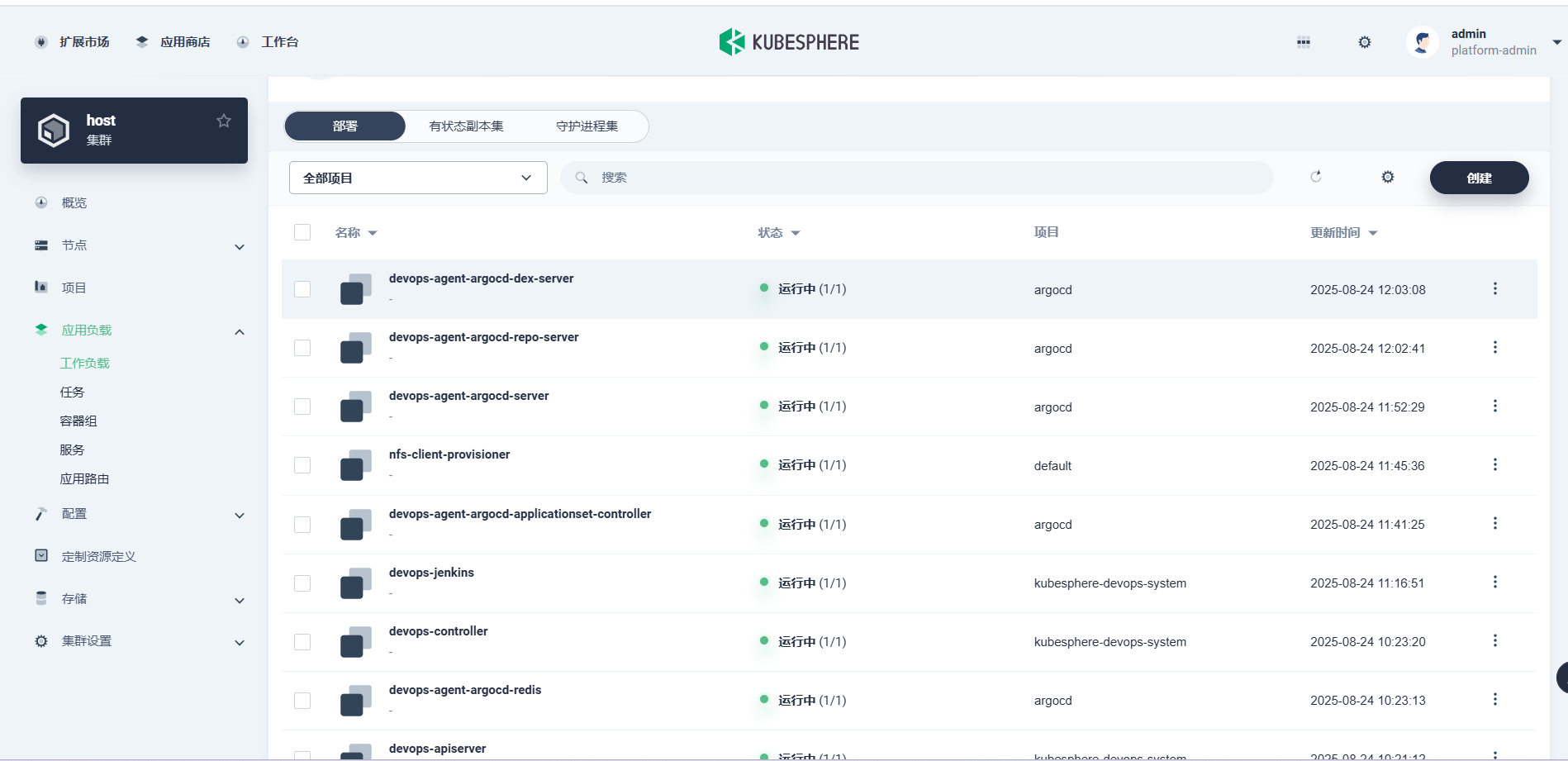

Check the Jenkins and Argo CD deployments:

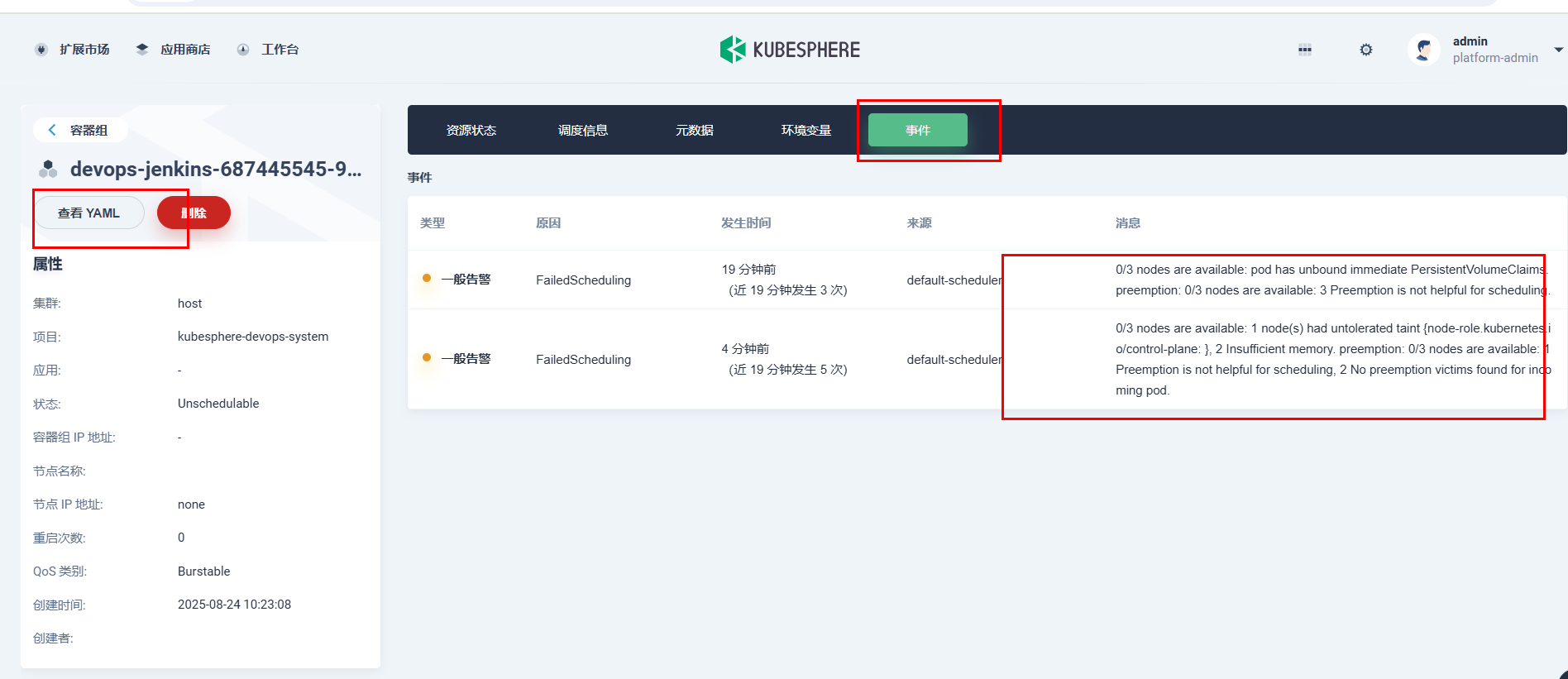

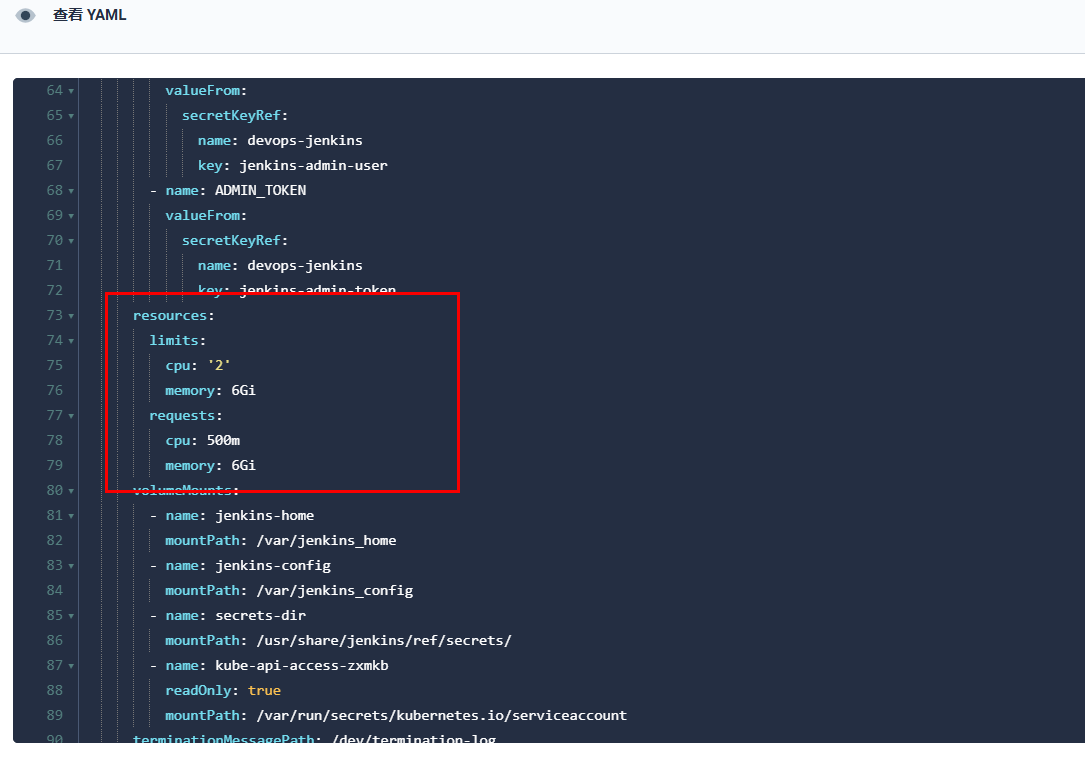

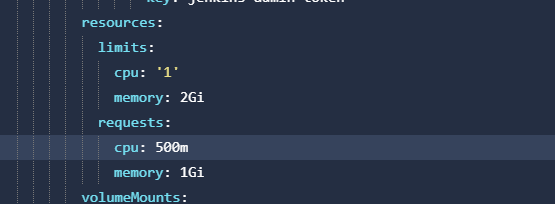

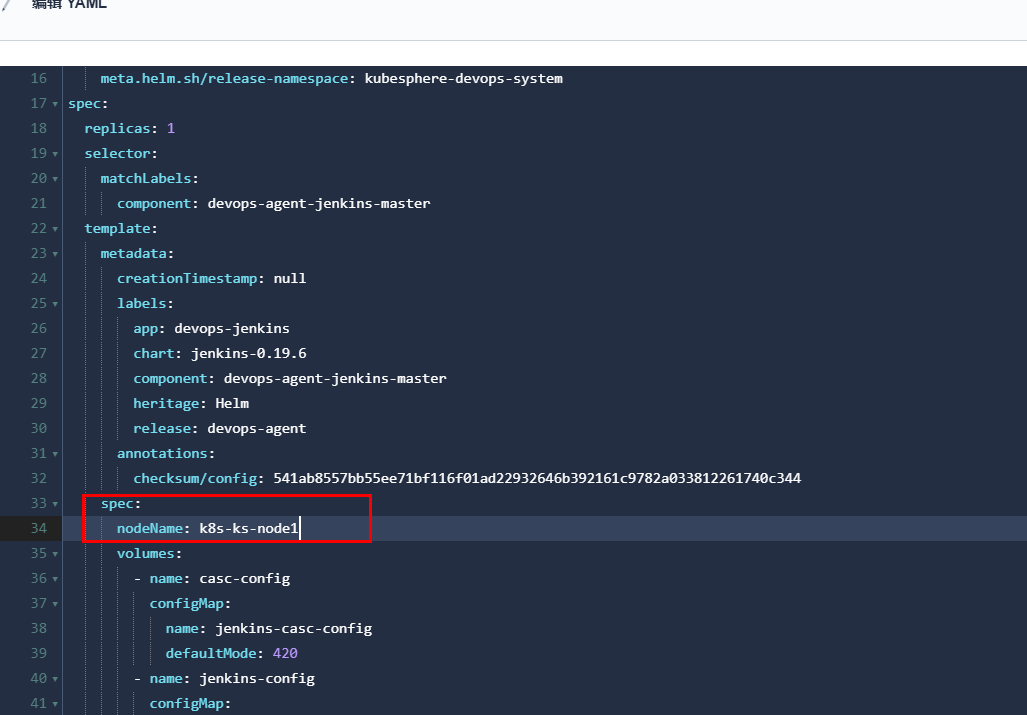

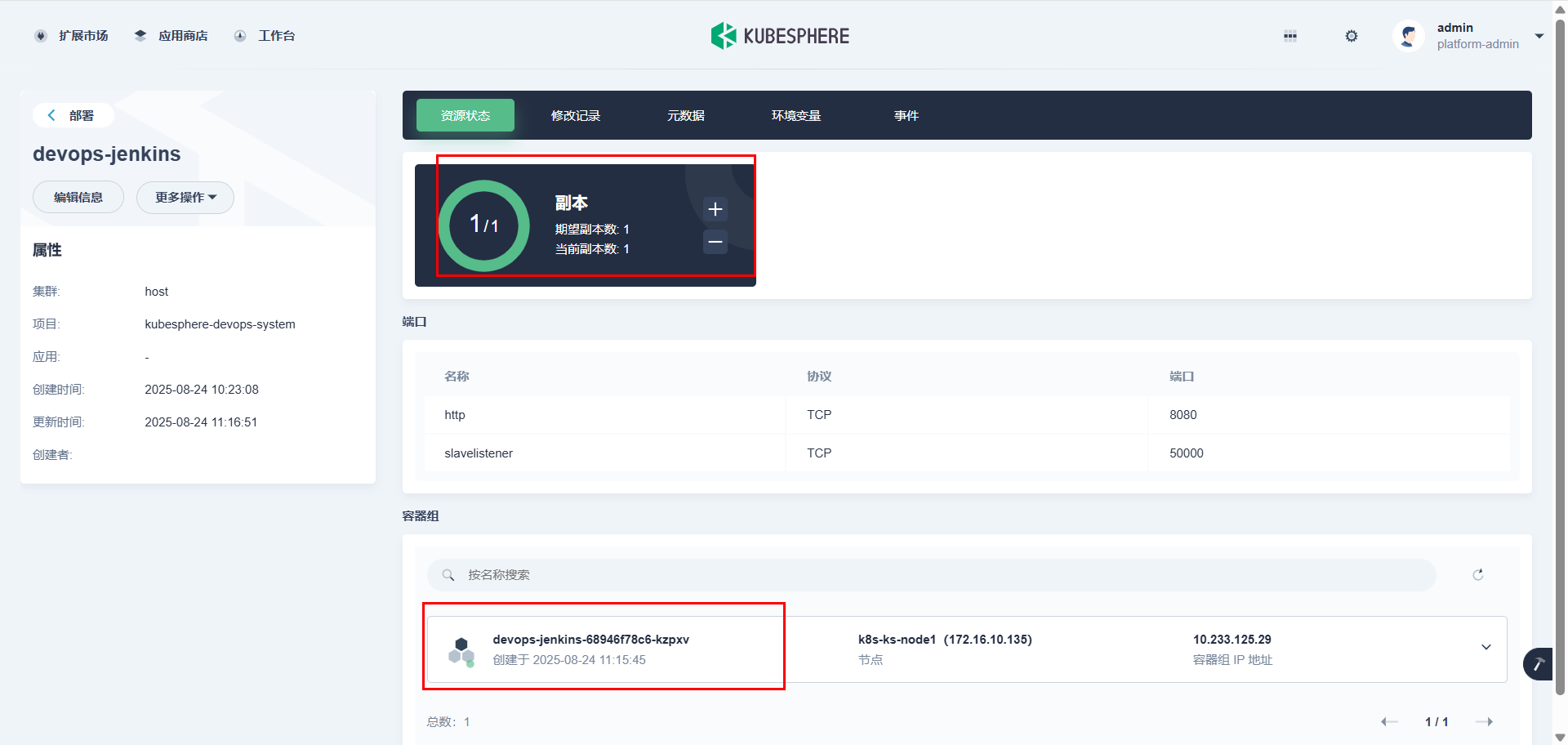

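After a while, however, the pods still had not started, so check the events. They show that the pod cannot be scheduled on the master because of its taint, and that the two worker nodes do not have enough memory. Memory is the place to start, so look at the resource requests in the default YAML.

Jenkins needs at least 1 CPU core and 2 GB of memory, so I adjusted the requests accordingly.

I also pinned it to the node with relatively more free resources. This is for testing only; with sufficient resources, none of these quota tweaks are needed.

It still failed. The request still needs at least 6 GB of memory, probably because the extension is heavily customized. In the end I temporarily increased the VMs' memory, kept the original resource settings, and it ran successfully.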
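You can run the checks described above from the command line. The sketch below assumes that the DevOps extension runs Jenkins as deployment devops-jenkins in namespace kubesphere-devops-system; confirm this with kubectl get deploy -A | grep jenkins. The function names and resource values are illustrative. If the extension manages this Deployment through Helm, a later upgrade may revert manual changes.

```shell
NS=kubesphere-devops-system   # assumed namespace of the DevOps extension
DEPLOY=devops-jenkins         # assumed Jenkins deployment name

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Why is the pod Pending? Recent events, plus node taints and allocated resources
diagnose_jenkins() {
  run kubectl -n "$NS" get events --sort-by=.lastTimestamp
  run kubectl describe nodes
}

# Set CPU/memory requests, e.g. resize_jenkins 1 2Gi
# (the API server rejects requests larger than the container's limits)
resize_jenkins() {
  run kubectl -n "$NS" set resources "deployment/$DEPLOY" --requests="cpu=$1,memory=$2"
}
```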

A few extra images must also be provided; otherwise some agent-side pods cannot pull their images.

# The image namespaces preset in the extension may not match the mirror's paths, so pull the pipeline agent images manually

ctr -n k8s.io image pull swr.cn-north-4.myhuaweicloud.com/ddn-k8s/quay.io/argoproj/argocd-applicationset:v0.4.1

ctr -n k8s.io images pull swr.cn-north-4.myhuaweicloud.com/ddn-k8s/quay.io/argoproj/argocd:v2.3.3

ctr -n k8s.io images pull swr.cn-north-4.myhuaweicloud.com/ddn-k8s/ghcr.io/dexidp/dex:v2.30.2

# Re-tag the images

ctr -n k8s.io image tag swr.cn-north-4.myhuaweicloud.com/ddn-k8s/quay.io/argoproj/argocd-applicationset:v0.4.1 swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/argoproj/argocd-applicationset:v0.4.1

ctr -n k8s.io image tag swr.cn-north-4.myhuaweicloud.com/ddn-k8s/quay.io/argoproj/argocd:v2.3.3 swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/argoproj/argocd:v2.3.3

ctr -n k8s.io image tag swr.cn-north-4.myhuaweicloud.com/ddn-k8s/ghcr.io/dexidp/dex:v2.30.2 swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/dexidp/dex:v2.30.2
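These three images follow one rule: the mirror path for quay.io or ghcr.io is re-tagged as if the image lived under docker.io on the same mirror. The sketch below implements that rule, assuming containerd and the same mirror prefix. The function name is hypothetical.

```shell
MIRROR=swr.cn-north-4.myhuaweicloud.com/ddn-k8s

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Each argument is registry/repo:tag, e.g. quay.io/argoproj/argocd:v2.3.3
retag_as_docker_io() {
  for ref in "$@"; do
    src="$MIRROR/$ref"
    # Drop the source registry (text up to the first "/") and put docker.io in its place
    dst="$MIRROR/docker.io/${ref#*/}"
    run ctr -n k8s.io images pull "$src"
    run ctr -n k8s.io images tag "$src" "$dst"
  done
}

# Usage:
#   retag_as_docker_io quay.io/argoproj/argocd-applicationset:v0.4.1 \
#     quay.io/argoproj/argocd:v2.3.3 ghcr.io/dexidp/dex:v2.30.2
```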

This completes the steps needed to deploy the pipeline extension.

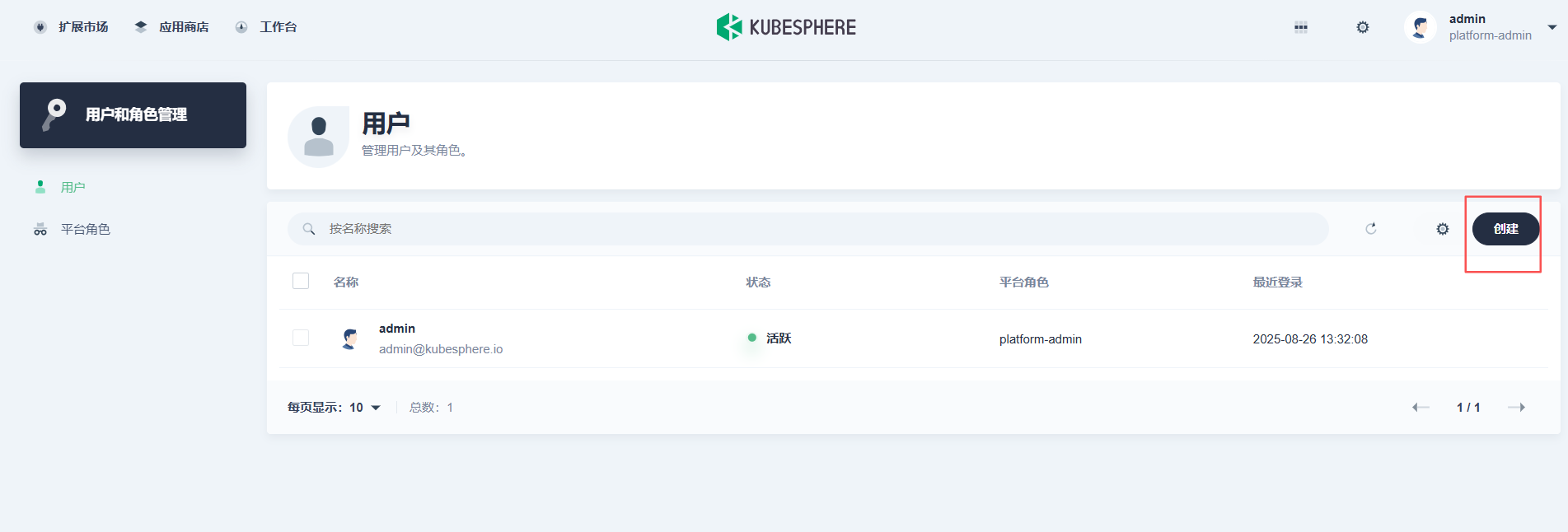

2.3 Accounts and roles

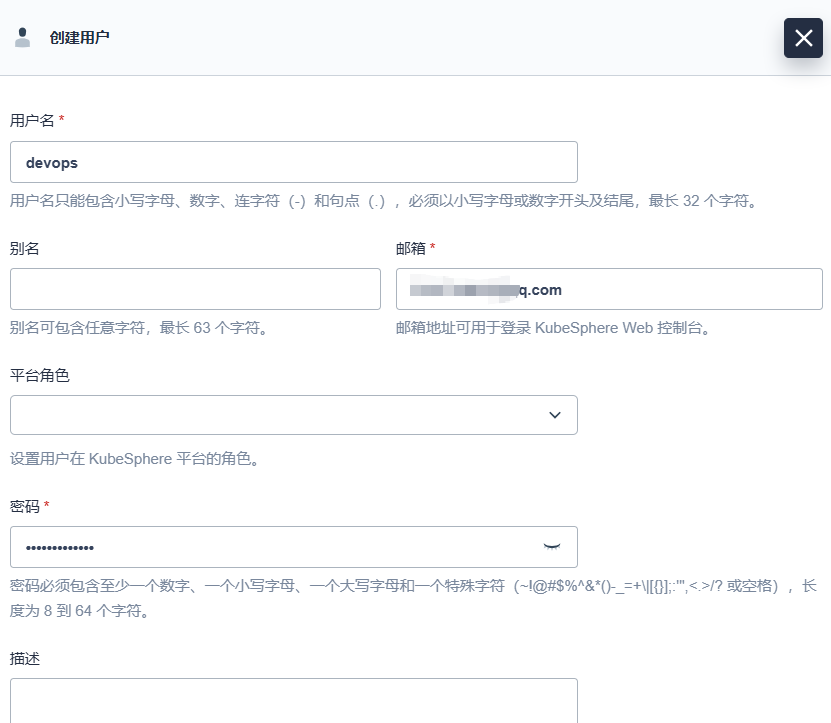

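Create an account for the pipeline demo in section 2.4.

Create an account named devops with an email address and a password (the password must meet the strength requirements).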

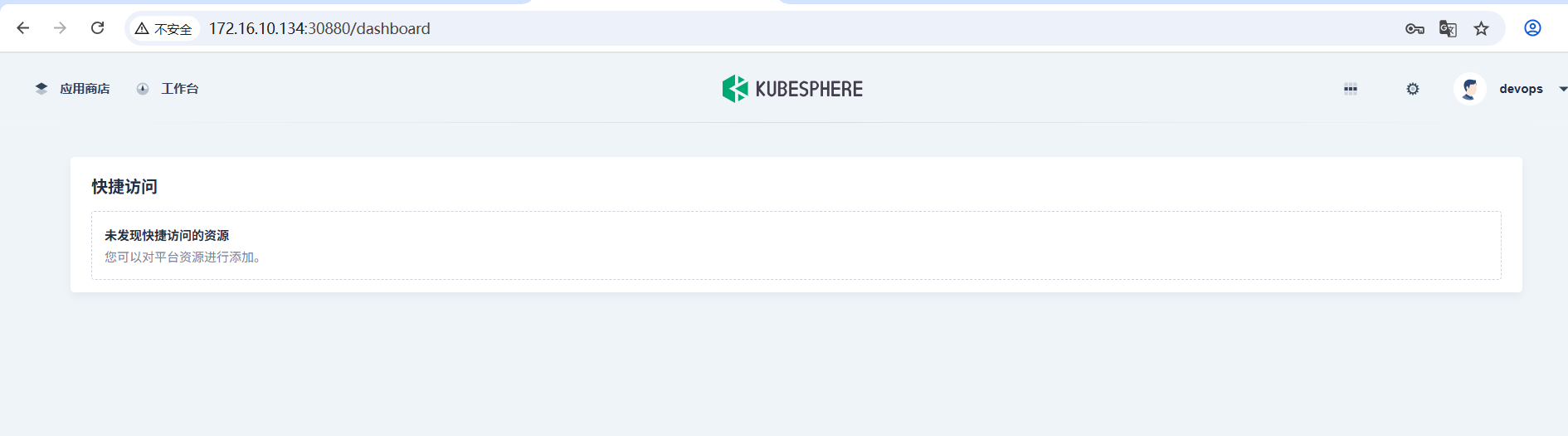

After logging in, the account has no resources it can access yet; continue with section 2.4.2 below.

2.4 Pipeline project demo

2.4.1 Create a workspace

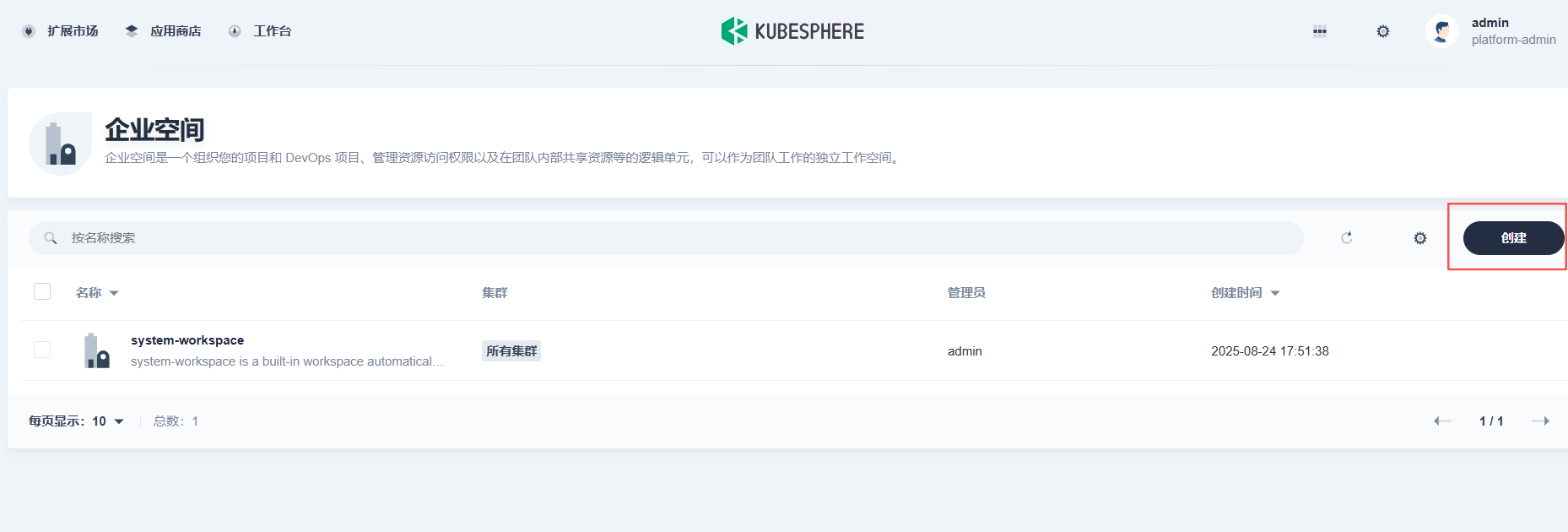

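A dedicated workspace is needed because the default system-workspace does not support creating pipelines; it is mainly for managing clusters, roles and user permissions, as shown in the figure.

The workspace creation flow is as follows: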

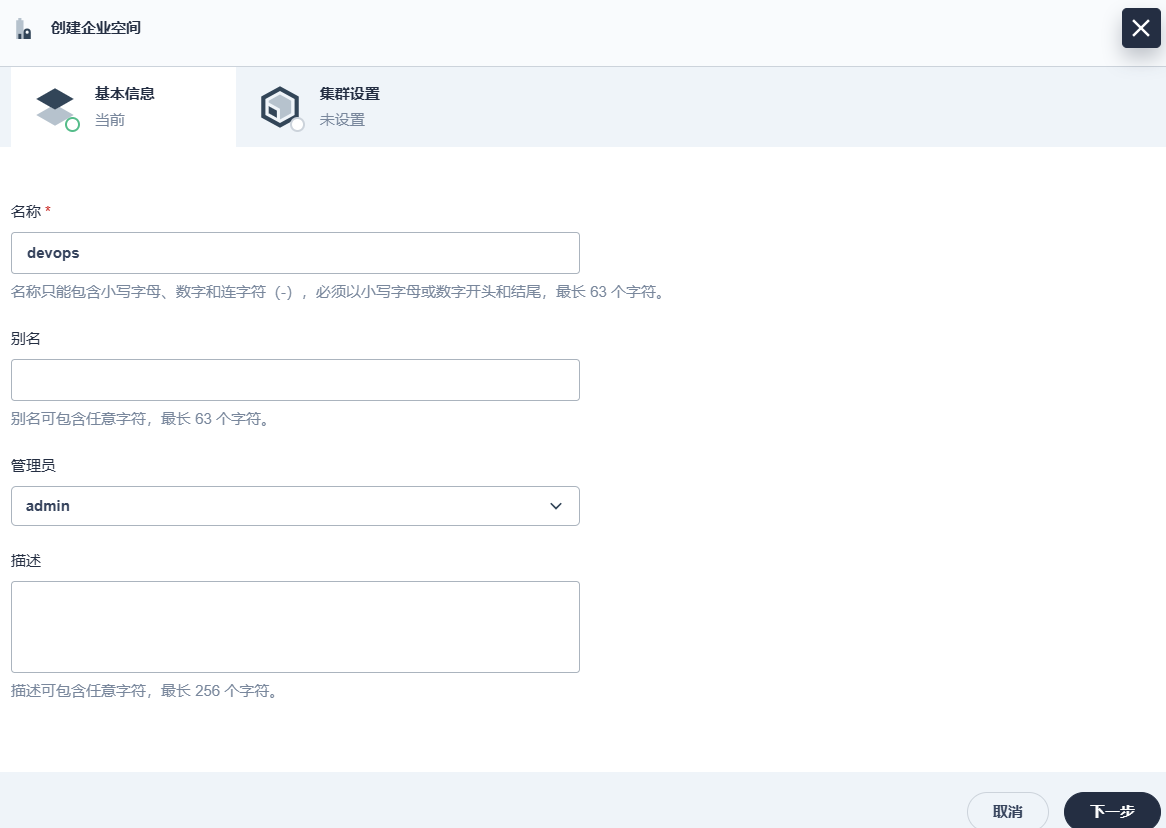

Only the name is required; the alias and description are optional and can be customized.

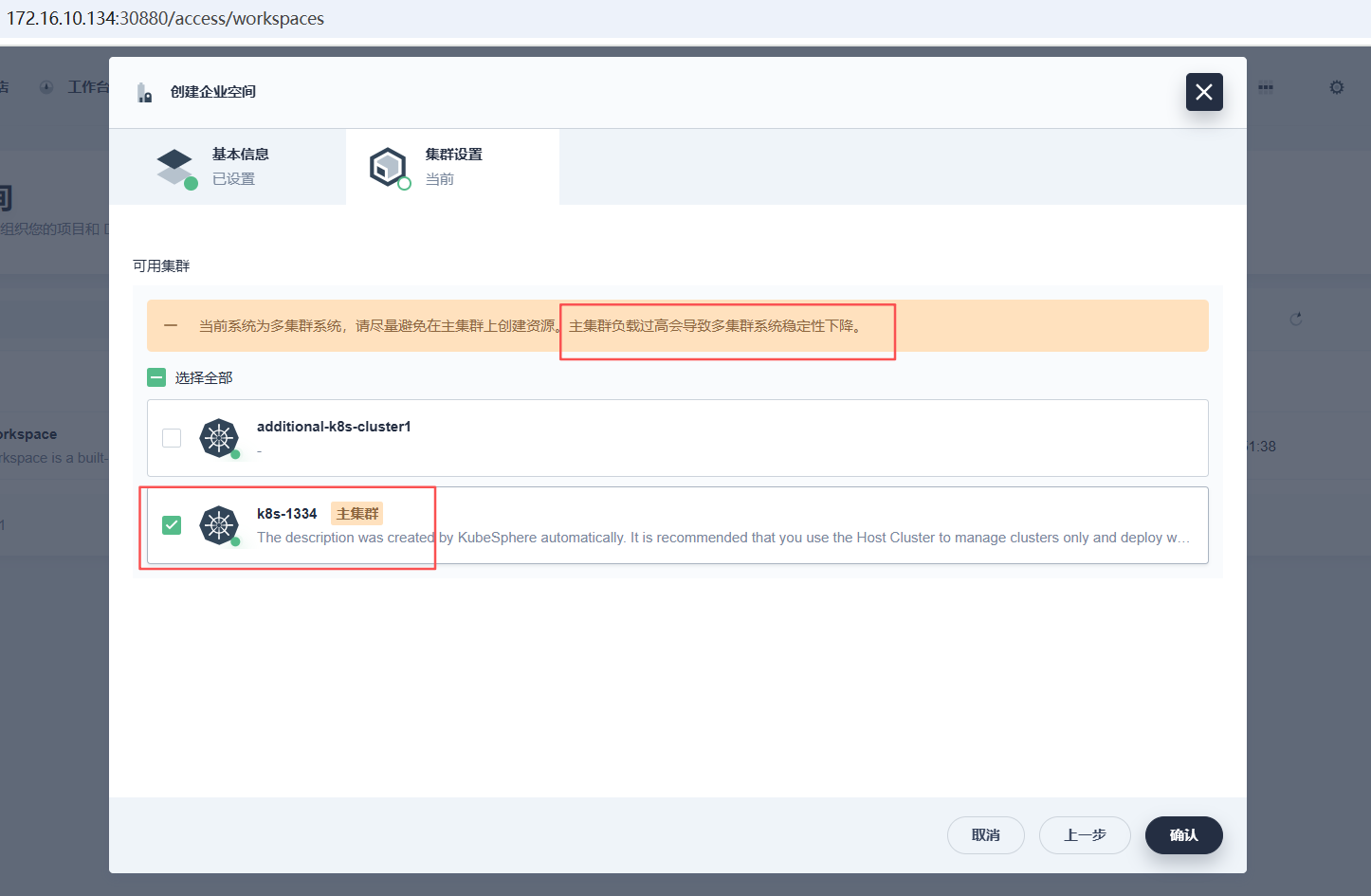

For this demo only, I chose the host cluster because it has more resources; avoid this in production!

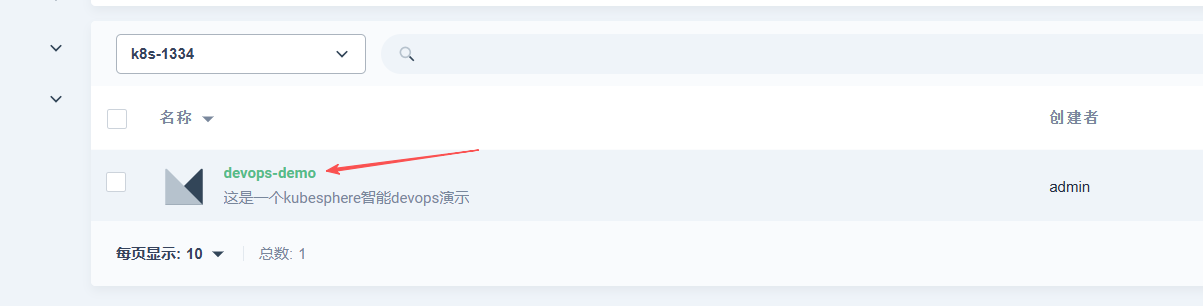

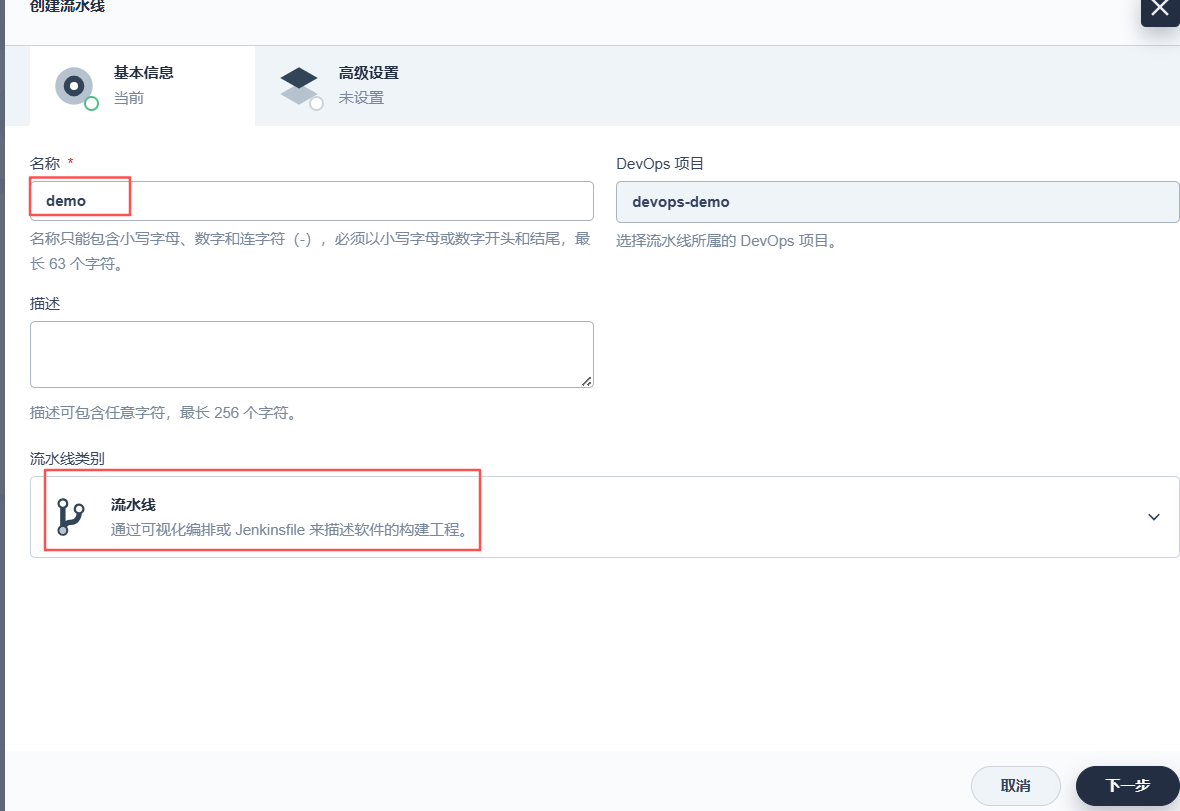

2.4.2 Create a simple pipeline

Enter a name and select the cluster.

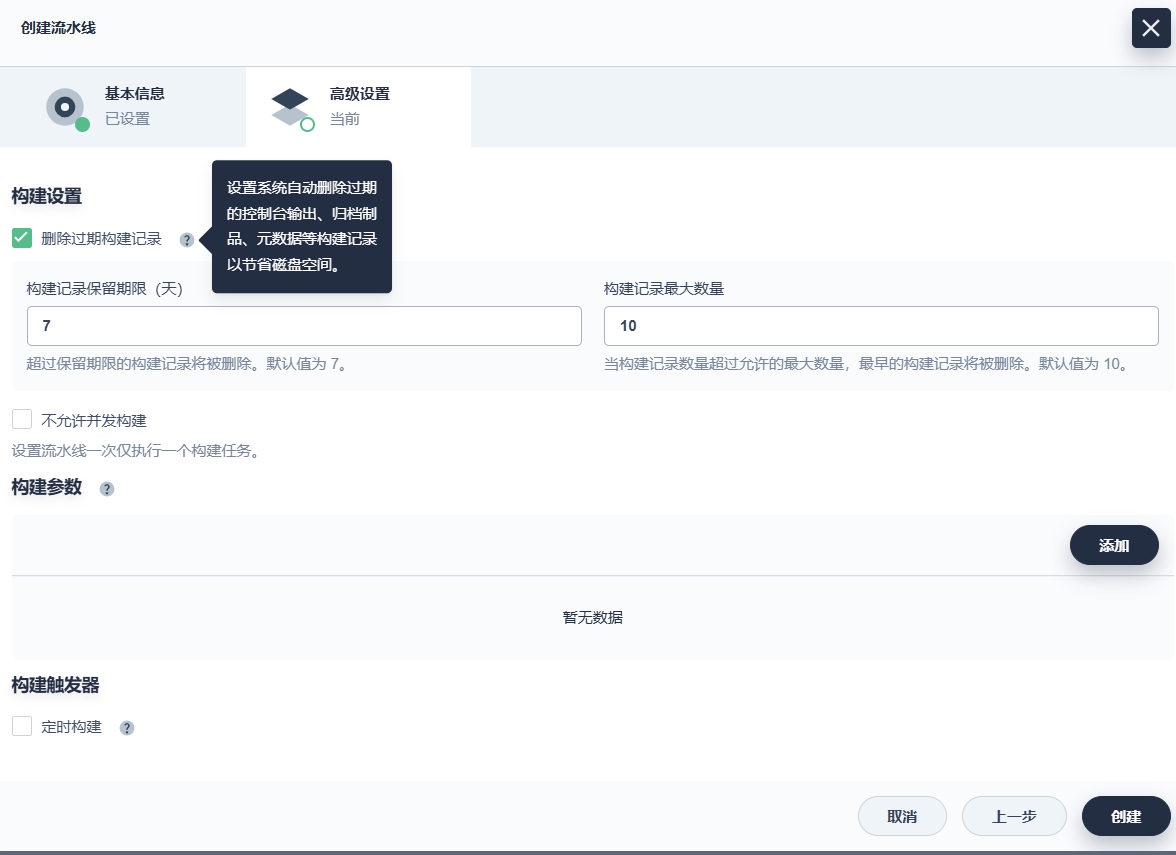

The settings can be left at their defaults.

A pipeline project is then created.

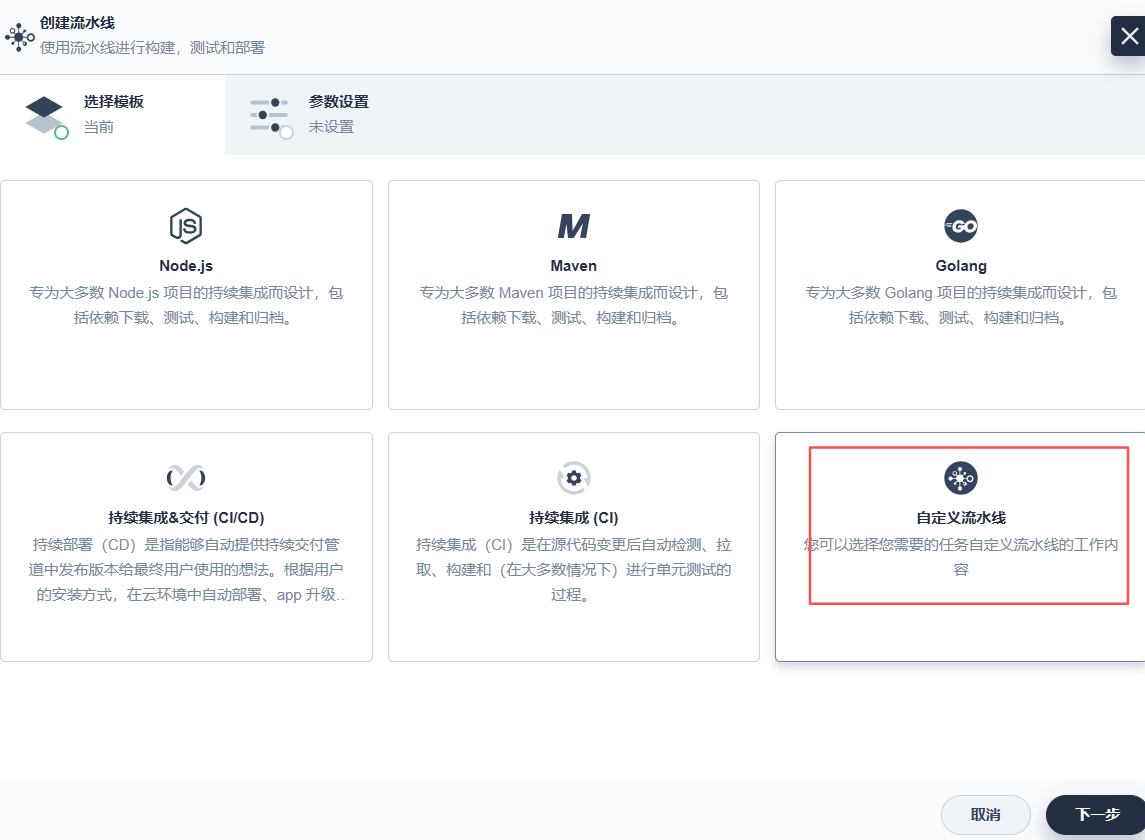

Configure the Jenkinsfile: you can write it in advance or edit it visually in the console.

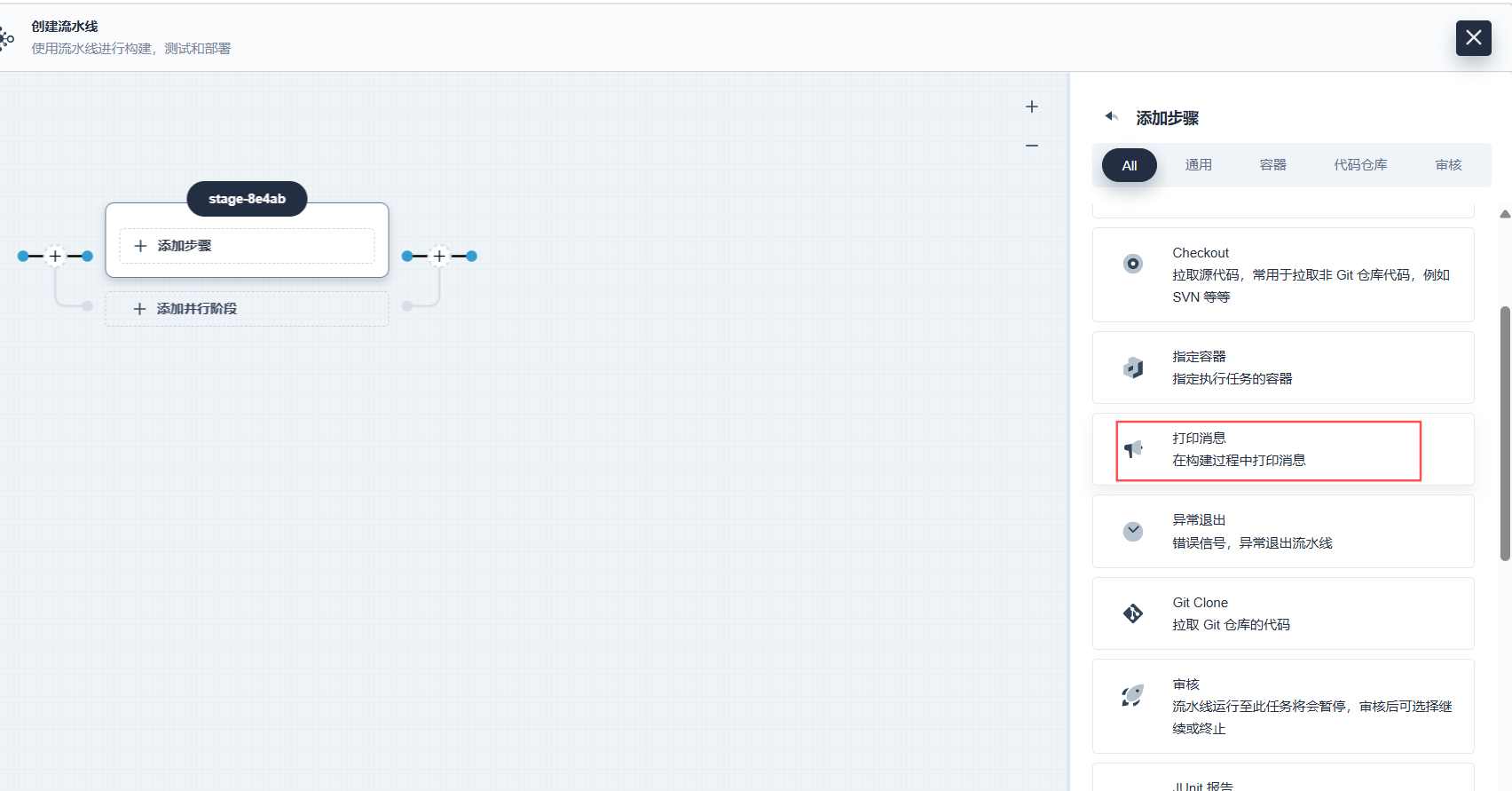

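The visual editor is demonstrated here.

Start with a custom pipeline, which needs no extra images; the other templates all pull images to use as the underlying build containers.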

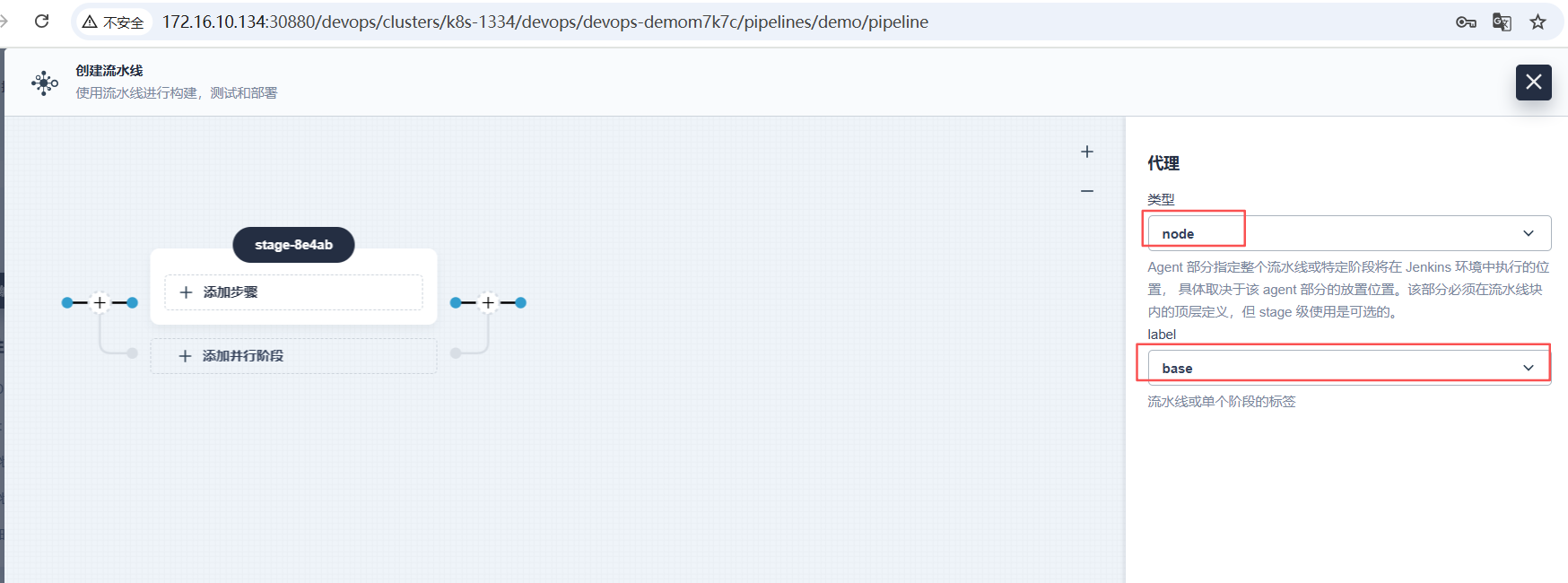

That is, the demo uses Jenkins's base dynamic agent, without a Maven or Node.js environment.

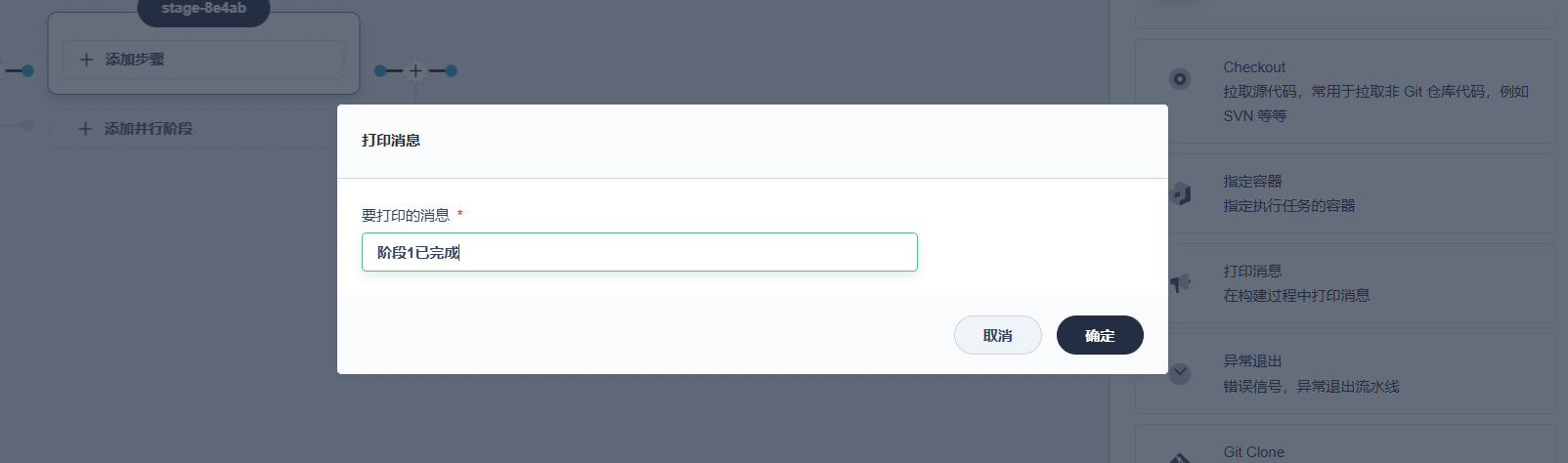

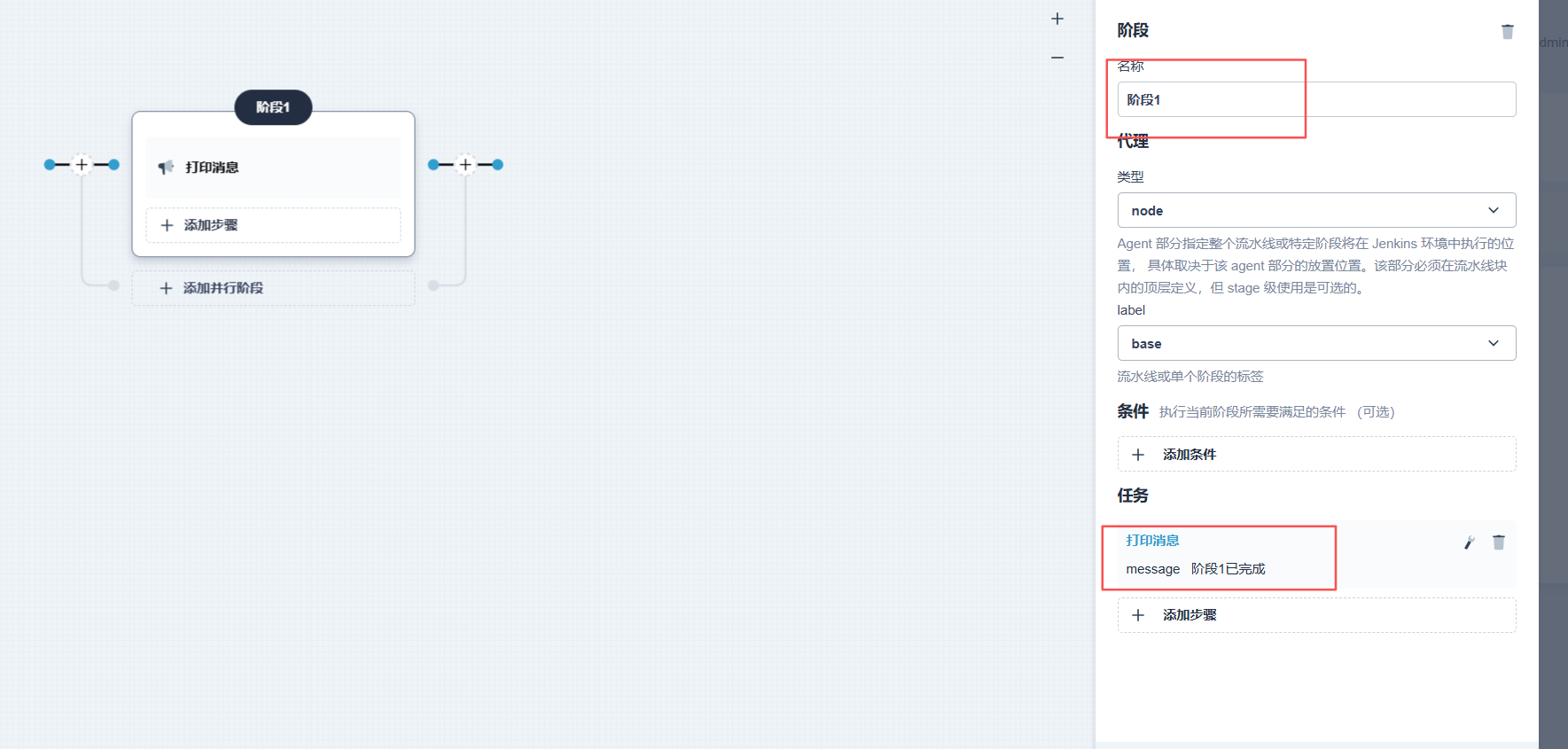

Add a print-message step.

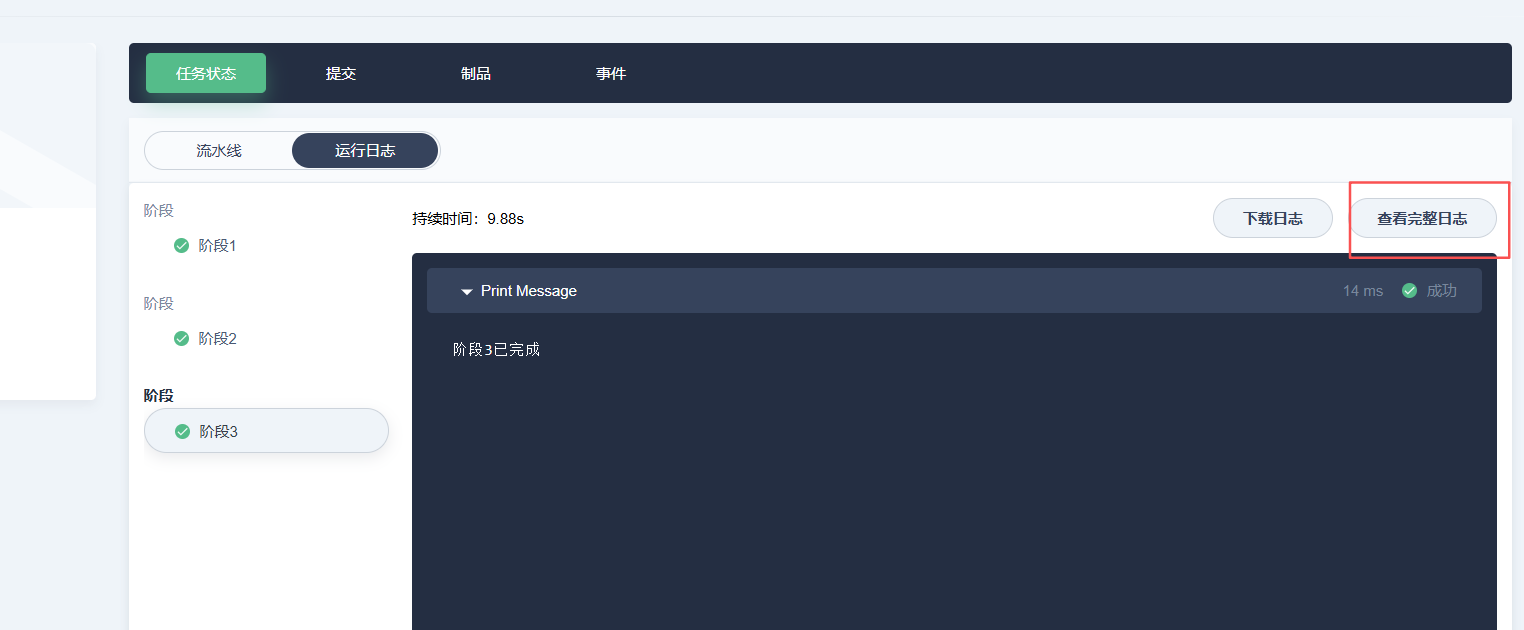

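A typical build has three or four stages; following the same pattern, create three stages. The Jenkinsfile is generated automatically and can be viewed. Then run the pipeline.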
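For reference, the Jenkinsfile for such a three-stage pipeline looks roughly like the one below. This is a sketch, not the exact output of the editor. It assumes the DevOps extension's built-in base agent label, and the stage names and messages are placeholders. The Jenkinsfile is wrapped in a shell function so it can be written to a file.

```shell
# Write a minimal three-stage declarative Jenkinsfile to stdout.
# 'base' is assumed to be the built-in KubeSphere agent label; stage names are placeholders.
jenkinsfile() {
  cat <<'EOF'
pipeline {
  agent {
    node {
      label 'base'
    }
  }
  stages {
    stage('build') {
      steps {
        echo 'build stage'
      }
    }
    stage('test') {
      steps {
        echo 'test stage'
      }
    }
    stage('deploy') {
      steps {
        echo 'deploy stage'
      }
    }
  }
}
EOF
}

# Usage: jenkinsfile > Jenkinsfile
```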

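2.4.3 Grant the account project roles and permissions

In general, an ordinary pipeline operator needs only two things: access to the workspace's DevOps projects and projects, to create and run pipelines (granting only run permission is also reasonable), and cluster management permissions, to deploy, check deployments and troubleshoot.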

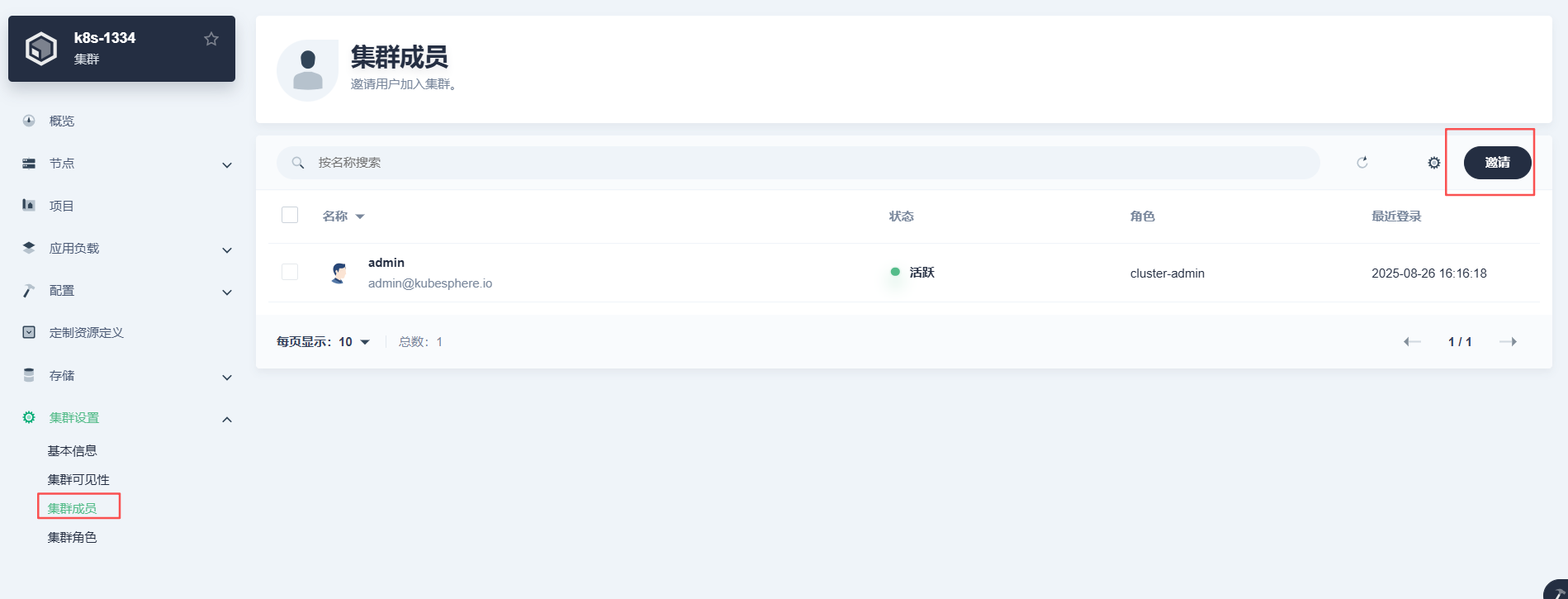

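2.4.3.1 Grant access to the k8s cluster

Grant the administrator role on the cluster so that the account can freely create and deploy resources.

2.4.3.2 Grant access to the workspace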

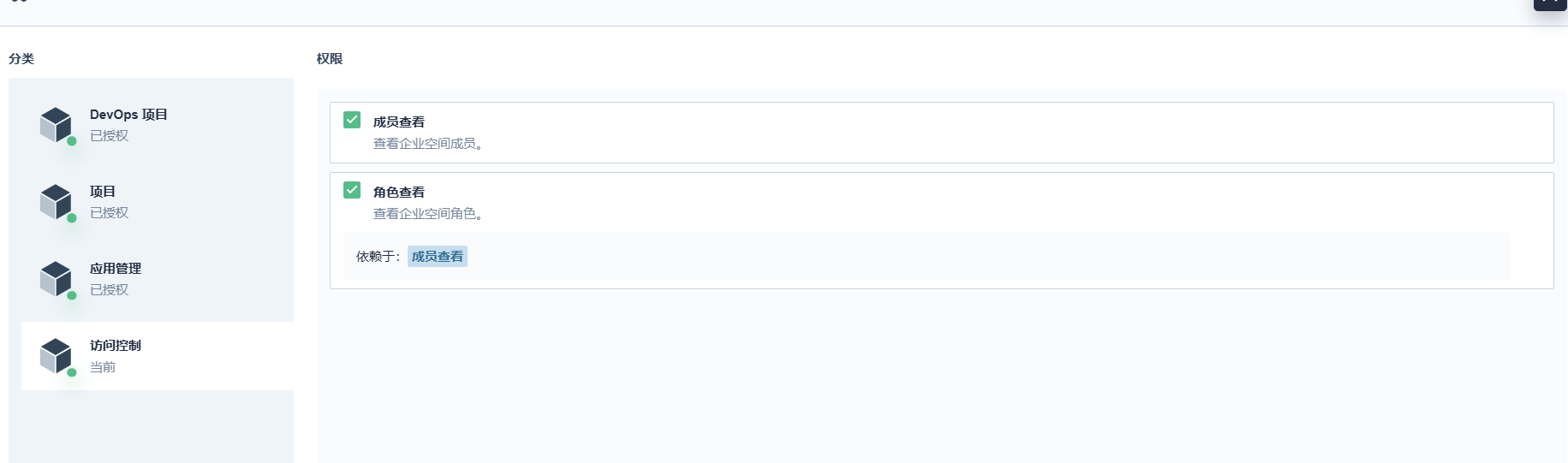

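Log in as admin and open the workspace created above. To simulate permission control as in production, first set up a workspace role.

Create a DevOps implementer role that can only operate pipelines.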

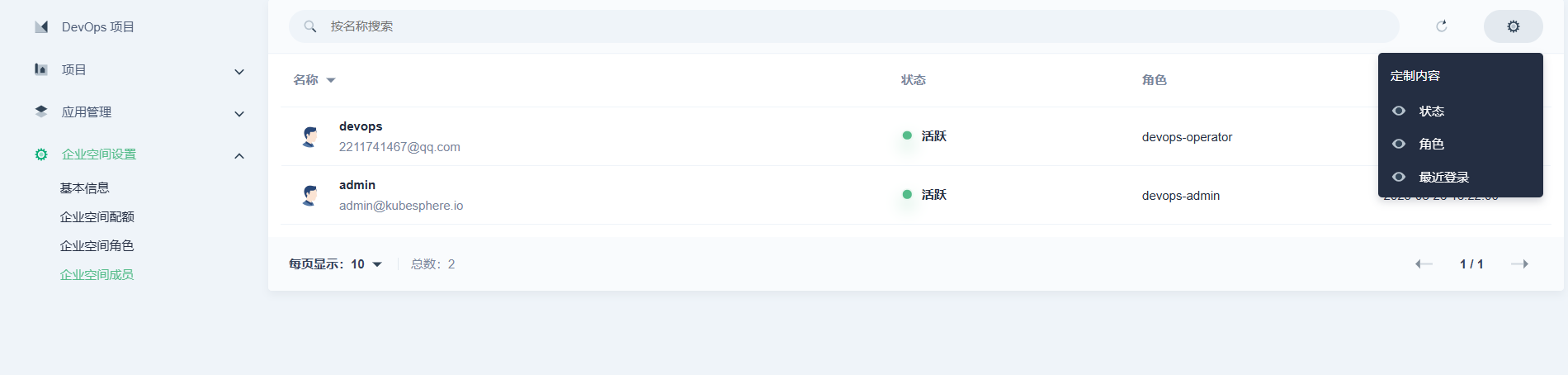

Invite the account to the workspace.

2.4.3.3 Grant access to the pipeline

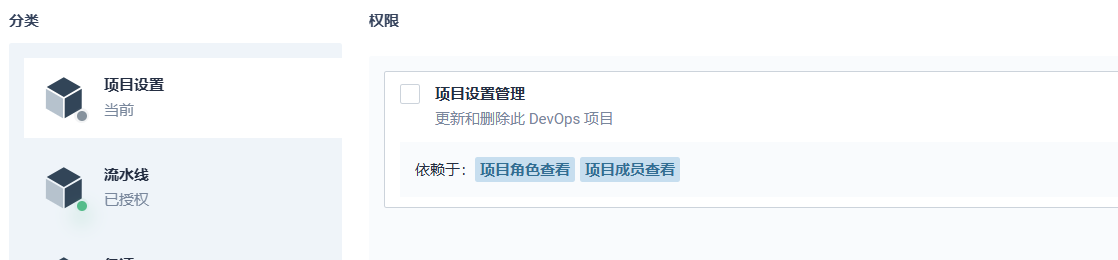

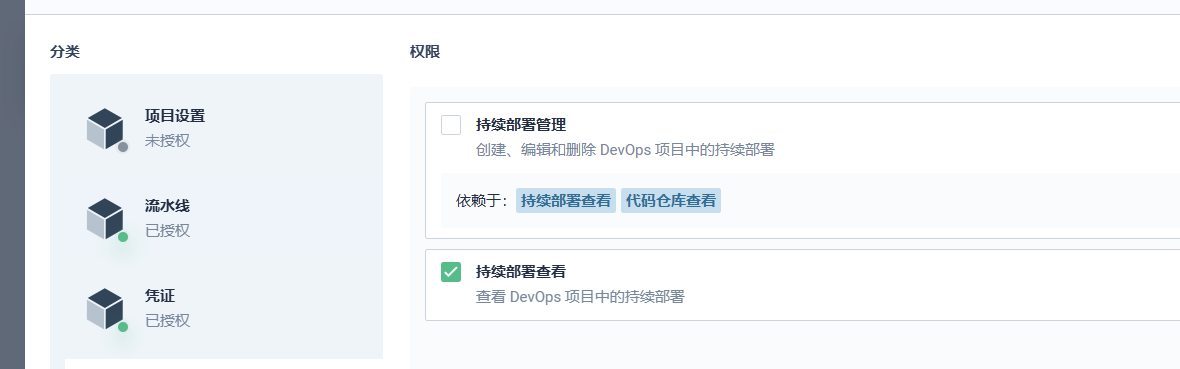

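The built-in operator role is mostly fine, but its permissions can be narrowed further so that the account can create and run pipelines without exceeding its authority.

Permission details:

Invite devops to the pipeline project.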

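2.4.3.4 Log in as the new member

After logging in as devops, the workspace and cluster management are now visible instead of an empty page.

2.4.3.4.1 Check workspace permissions

- No permission to manage the workspace
- No permission to manage DevOps projects
- Permission to manage pipelines
- No permission to create roles
- Permission to view project members, but not to invite members

2.4.3.4.2 Check k8s cluster permissions

The cluster has only two roles, administrator and viewer. devops is a cluster administrator, so it can create and operate resources and invite members normally, which meets production requirements.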

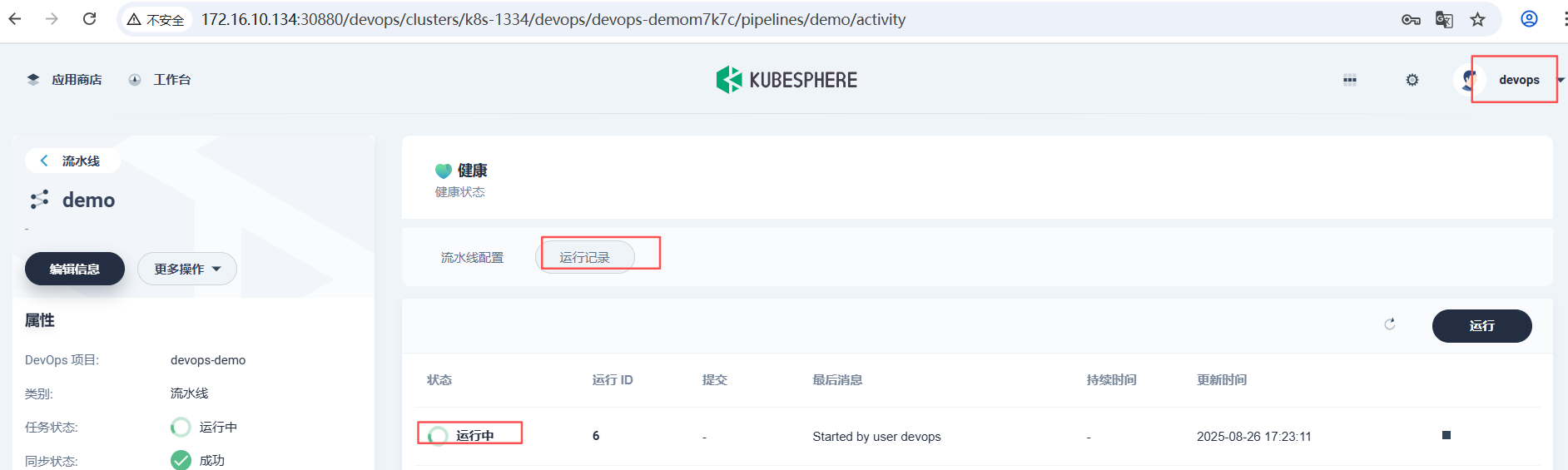

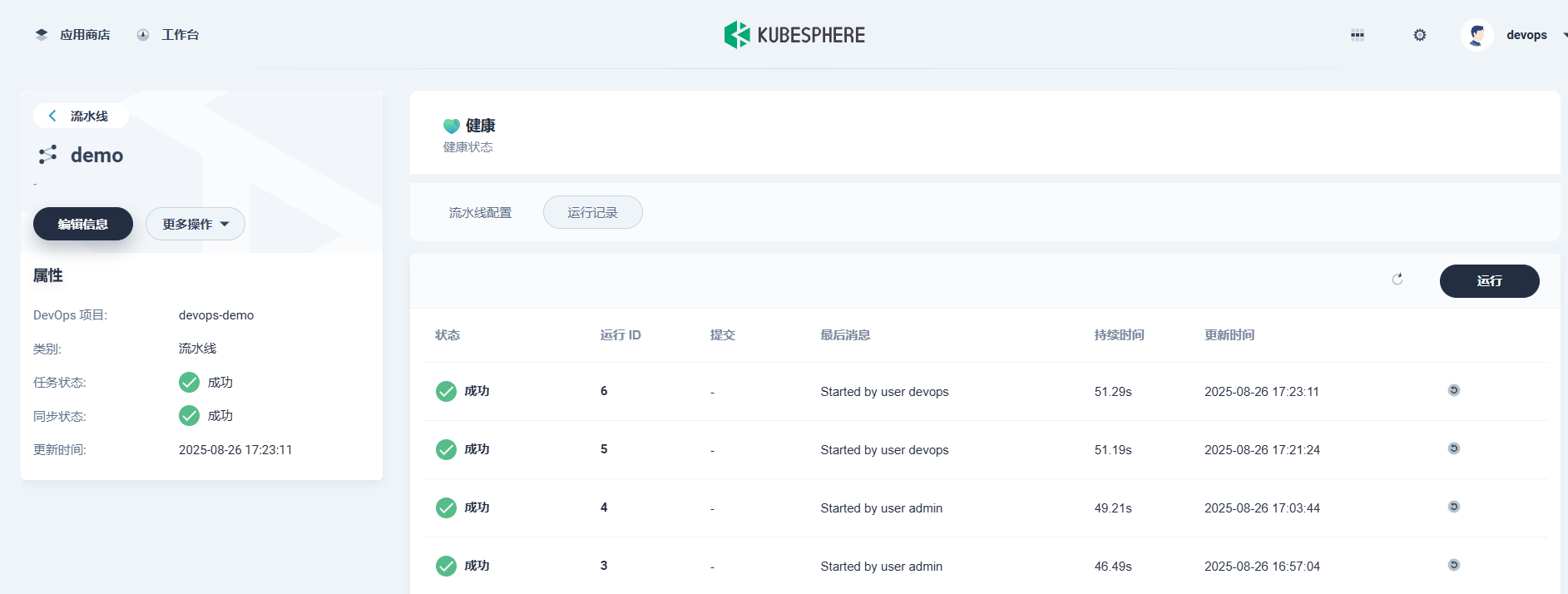

2.4.4 Run the pipeline

Use the devops account.

The run's status can be viewed in either of these two places.

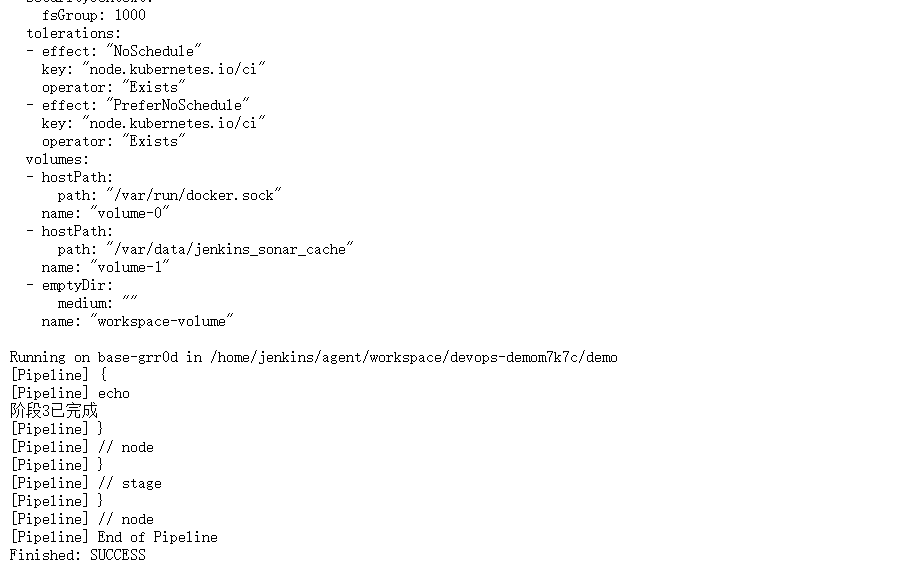

After a short wait, all three stages complete. The full log can be viewed or downloaded, which helps with troubleshooting in real use.

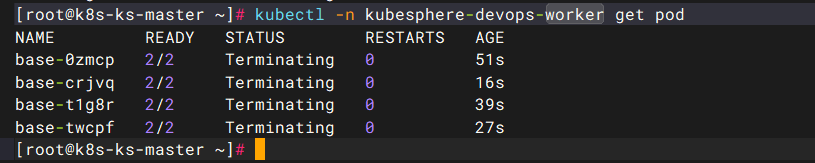

While the pipeline runs, a few basic dynamic jenkins-agent pods are created in the kubesphere-devops-worker namespace; they are deleted automatically once the stages finish.
